6 Inner Product Spaces

6.1 Inner Products and Norms

Definition

Definition: Inner product [core]

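Let $V$ be a vector space over $F$ (where $F = \mathbb{R}$ or $\mathbb{C}$). An *inner product* on $V$ is a function that assigns to every ordered pair of vectors $x, y \in V$ a scalar in $F$, denoted $\langle x, y\rangle$, such that for all $x, y, z \in V$ and all $c \in F$:

- $\langle x + z, y\rangle = \langle x, y\rangle + \langle z, y\rangle$.
- $\langle cx, y\rangle = c\langle x, y\rangle$.
- $\overline{\langle x, y\rangle} = \langle y, x\rangle$, where the bar denotes complex conjugation.
- $\langle x, x\rangle > 0$ if $x \neq 0$.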

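Note that:

- The 3rd condition reduces to $\langle x, y\rangle = \langle y, x\rangle$ if $F = \mathbb{R}$.
- The 1st and 2nd conditions simply require that the inner product be linear in the first component. Combined with the 3rd condition, they make it conjugate linear in the second component (see Theorem 6.1).
- It is easily shown that if $a_1, \dots, a_n \in F$ and $y, v_1, \dots, v_n \in V$, then
  $$\Big\langle \sum_{i=1}^n a_i v_i,\ y \Big\rangle = \sum_{i=1}^n a_i \langle v_i, y\rangle.$$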

A vector space by itself has no notion of distance or length. Adding an inner product supplies this richer structure, the so-called inner product space structure.

Example 6.1.1

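For $x = (a_1, \dots, a_n)$ and $y = (b_1, \dots, b_n)$ in $F^n$, define
$$\langle x, y\rangle = \sum_{i=1}^n a_i \overline{b_i}.$$

The verification that $\langle \cdot, \cdot\rangle$ satisfies all four conditions is easy. For example, if $z = (c_1, \dots, c_n)$, then for the 1st condition
$$\langle x + z, y\rangle = \sum_{i=1}^n (a_i + c_i)\overline{b_i} = \sum_{i=1}^n a_i\overline{b_i} + \sum_{i=1}^n c_i\overline{b_i} = \langle x, y\rangle + \langle z, y\rangle.$$

Thus, for $x = (1+i, 4)$ and $y = (2-3i, 4+5i)$ in $\mathbb{C}^2$,
$$\langle x, y\rangle = (1+i)(2+3i) + 4(4-5i) = 15 - 15i.$$

The inner product in Example 6.1.1 is called the standard inner product on $F^n$. When $F = \mathbb{R}$ the conjugations are not needed, and in early courses this standard inner product is usually called the dot product, denoted $x \cdot y$.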
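The standard inner product can be sketched directly from its formula $\sum a_i \overline{b_i}$. The function name `inner` is illustrative, and vectors are plain Python lists of numbers; the usage reproduces the $\mathbb{C}^2$ computation $\langle (1+i, 4), (2-3i, 4+5i)\rangle = 15 - 15i$ and shows the conjugate symmetry of the 3rd condition.

```python
def inner(x, y):
    """Standard inner product on F^n: the sum of a_i * conj(b_i)."""
    if len(x) != len(y):
        raise ValueError("vectors must have the same length")
    # complex(b) lets plain ints and floats be conjugated too
    return sum(a * complex(b).conjugate() for a, b in zip(x, y))


x = [1 + 1j, 4]
y = [2 - 3j, 4 + 5j]
print(inner(x, y))  # (15-15j)
print(inner(y, x))  # (15+15j), the complex conjugate
```

Conjugating the second argument, rather than the first, matches the convention here that the inner product is linear in its first component.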

Example 6.1.2

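If $\langle x, y\rangle$ is any inner product on a vector space $V$ and $r > 0$, we may define another inner product by the rule $\langle x, y\rangle' = r\langle x, y\rangle$. If $r \le 0$, then the 4th condition would not hold.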

Example 6.1.3

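Let $V = C([0,1])$, the vector space of real-valued continuous functions on $[0,1]$. For $f, g \in V$, define $\langle f, g\rangle = \int_0^1 f(t)g(t)\,dt$.

- Since the preceding integral is linear in $f$, the 1st and 2nd conditions are immediate.
- The 3rd condition is trivial, because the functions are real-valued.
- If $f \neq 0$, then $f^2$ is bounded away from zero on some subinterval of $[0,1]$ (continuity is used here), and hence $\langle f, f\rangle = \int_0^1 [f(t)]^2\,dt > 0$.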

conjugate transpose

Definition: conjugate transpose

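Let $A \in M_{m\times n}(F)$. We define the *conjugate transpose* (or *adjoint*) of $A$ to be the $n \times m$ matrix $A^*$ such that $(A^*)_{ij} = \overline{A_{ji}}$ for all $i, j$.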

Example 6.1.4

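If
$$A = \begin{pmatrix} i & 1+2i \\ 2 & 3+4i \end{pmatrix},$$
then
$$A^* = \begin{pmatrix} -i & 2 \\ 1-2i & 3-4i \end{pmatrix}.$$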

Notice that:

- If $x$ and $y$ are viewed as column vectors in $F^n$, then $\langle x, y\rangle = y^* x$.
- The conjugate transpose of a matrix plays a very important role in the remainder of this chapter. In the case that $A$ has only real entries, $A^*$ is simply the transpose of $A$.
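The conjugate transpose and the identity $\langle x, y\rangle = y^* x$ can be checked with a small list-of-rows sketch. The helper names `conj_transpose` and `matmul` are illustrative. It uses the matrix $A$ of Example 6.1.4 and represents column vectors as $n \times 1$ matrices, so $y^* x$ comes out as a $1 \times 1$ matrix.

```python
def conj_transpose(A):
    """Return A*, the n x m matrix with (A*)_{ij} = conj(A_{ji}); A is a list of rows."""
    m, n = len(A), len(A[0])
    return [[complex(A[j][i]).conjugate() for j in range(m)] for i in range(n)]


def matmul(A, B):
    """Plain list-of-rows matrix product."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


A = [[1j, 1 + 2j],
     [2, 3 + 4j]]
A_star = conj_transpose(A)

# Column vectors x, y in C^2 as 2 x 1 matrices; y* x is then a 1 x 1 matrix.
x = [[1 + 1j], [4]]
y = [[2 - 3j], [4 + 5j]]
print(matmul(conj_transpose(y), x))  # [[(15-15j)]]
```

The $1 \times 1$ result agrees with the standard inner product of the same two vectors computed in Example 6.1.1.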

Example 6.1.5

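Let $V = M_{n\times n}(F)$, and define $\langle A, B\rangle = \operatorname{tr}(B^*A)$ for $A, B \in V$. (Recall that the trace of a matrix $A$ is $\operatorname{tr}(A) = \sum_{i=1}^n A_{ii}$.) We verify the 1st and 4th conditions and leave the 2nd and 3rd to the reader. Let $A, B, C \in V$. Then
$$\langle A + B, C\rangle = \operatorname{tr}(C^*(A+B)) = \operatorname{tr}(C^*A) + \operatorname{tr}(C^*B) = \langle A, C\rangle + \langle B, C\rangle.$$

Also
$$\langle A, A\rangle = \operatorname{tr}(A^*A) = \sum_{i=1}^n (A^*A)_{ii} = \sum_{i=1}^n \sum_{k=1}^n (A^*)_{ik}A_{ki} = \sum_{i=1}^n\sum_{k=1}^n \overline{A_{ki}}\,A_{ki} = \sum_{i=1}^n\sum_{k=1}^n |A_{ki}|^2.$$

Now if $A \neq 0$, then $A_{ki} \neq 0$ for some $k$ and $i$, so $\langle A, A\rangle > 0$.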
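The computation above shows that $\operatorname{tr}(B^*A)$ is just the entrywise sum $\sum_{k,i} A_{ki}\overline{B_{ki}}$. A minimal sketch can compare the two forms. The matrix `B` below is an arbitrary illustrative choice, and `A` is the matrix of Example 6.1.4, for which $\langle A, A\rangle = |i|^2 + |1+2i|^2 + |2|^2 + |3+4i|^2 = 35$.

```python
def conj_transpose(A):
    m, n = len(A), len(A[0])
    return [[complex(A[j][i]).conjugate() for j in range(m)] for i in range(n)]


def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


def trace(M):
    return sum(M[i][i] for i in range(len(M)))


def frobenius(A, B):
    """<A, B> = tr(B* A) on square matrices."""
    return trace(matmul(conj_transpose(B), A))


def entrywise(A, B):
    """Equivalent form: sum over all entries of A_ki * conj(B_ki)."""
    return sum(a * complex(b).conjugate() for ra, rb in zip(A, B) for a, b in zip(ra, rb))


A = [[1j, 1 + 2j], [2, 3 + 4j]]
B = [[1, 2j], [0, 1 - 1j]]
print(frobenius(A, A).real)  # 35.0
print(frobenius(A, B), entrywise(A, B))
```

The entrywise form also makes clear why the 4th condition holds: $\langle A, A\rangle$ is a sum of squared moduli.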

inner product space

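The inner product on $M_{n\times n}(F)$ in Example 6.1.5 is called the *Frobenius inner product*.

A vector space $V$ over $F$ endowed with a specific inner product is called an *inner product space*. If $F = \mathbb{C}$, we call $V$ a complex inner product space; if $F = \mathbb{R}$, a real inner product space.

For the remainder of this chapter, $F^n$ denotes the inner product space with the standard inner product of Example 6.1.1, and $M_{n\times n}(F)$ denotes the inner product space with the Frobenius inner product of Example 6.1.5. Note that two distinct inner products on the same vector space yield two distinct inner product spaces. For instance, both
$$\langle f, g\rangle_1 = \int_0^1 f(t)g(t)\,dt \quad\text{and}\quad \langle f, g\rangle_2 = \int_{-1}^1 f(t)g(t)\,dt$$
are inner products on the vector space $P(\mathbb{R})$, yet they define different inner product spaces.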
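A small exact computation shows that the two inner products really do differ on the same pair of polynomials, $f(x) = x$ and $g(x) = x^2$. The sketch assumes polynomials are stored as coefficient lists `[c0, c1, c2, ...]`, and uses `fractions.Fraction` so the integrals come out as exact rationals. The helper names are illustrative.

```python
from fractions import Fraction


def poly_mul(p, q):
    """Multiply polynomials given as coefficient lists [c0, c1, c2, ...]."""
    out = [Fraction(0)] * (len(p) + len(q) - 1)
    for i, a in enumerate(p):
        for j, b in enumerate(q):
            out[i + j] += a * b
    return out


def integrate(p, a, b):
    """Exact value of the integral of p(t) from a to b."""
    a, b = Fraction(a), Fraction(b)
    return sum(c * (b ** (k + 1) - a ** (k + 1)) / (k + 1) for k, c in enumerate(p))


def ip1(p, q):  # <f, g>_1: integral over [0, 1]
    return integrate(poly_mul(p, q), 0, 1)


def ip2(p, q):  # <f, g>_2: integral over [-1, 1]
    return integrate(poly_mul(p, q), -1, 1)


x, x_sq = [0, 1], [0, 0, 1]
print(ip1(x, x_sq))  # 1/4
print(ip2(x, x_sq))  # 0
```

So $\langle x, x^2\rangle_1 = \tfrac14$ while $\langle x, x^2\rangle_2 = 0$: the same two vectors stand in different geometric relationships in the two inner product spaces.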

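A very important inner product space that resembles $C([0,1])$ is the space $\mathsf{H}$ of continuous complex-valued functions defined on the interval $[0, 2\pi]$ with the inner product
$$\langle f, g\rangle = \frac{1}{2\pi}\int_0^{2\pi} f(t)\,\overline{g(t)}\,dt.$$
The reason for the constant $\frac{1}{2\pi}$ will become evident later: it makes the functions $e^{int}$ unit vectors (Example 6.1.9).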

Show that the vector space $\mathsf{H}$, with this inner product, is an inner product space.

Check the conditions one by one.

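At this point, we mention a few facts about integration of complex-valued functions.

- The imaginary number $i$ can be treated as a constant under the integration sign.
- Every complex-valued function $f$ may be written as $f = f_1 + i f_2$, where $f_1$ and $f_2$ are real-valued functions. Thus we have
  $$\int f = \int f_1 + i\int f_2 \quad\text{and}\quad \overline{\int f} = \int \overline{f}.$$

From these properties, together with the continuity of the functions in $\mathsf{H}$, it follows that $\mathsf{H}$ is an inner product space.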

Theorem 6.1

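Let $V$ be an inner product space. Then for $x, y, z \in V$ and $c \in F$, the following statements are true.

- $\langle x, y + z\rangle = \langle x, y\rangle + \langle x, z\rangle$.
- $\langle x, cy\rangle = \overline{c}\,\langle x, y\rangle$.
- $\langle x, 0\rangle = \langle 0, x\rangle = 0$.
- $\langle x, x\rangle = 0$ if and only if $x = 0$.
- If $\langle x, y\rangle = \langle x, z\rangle$ for all $x \in V$, then $y = z$.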

The 1st and 2nd parts of Theorem 6.1 show that the inner product is conjugate linear in the second component.

Norms

Definition: Norm / Length

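Let $V$ be an inner product space. For $x \in V$, we define the *norm* or *length* of $x$ by $\|x\| = \sqrt{\langle x, x\rangle}$.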

Example 6.1.6

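Let $V = F^n$. If $x = (a_1, \dots, a_n)$, then
$$\|x\| = \Big[\sum_{i=1}^n |a_i|^2\Big]^{1/2}$$
is the Euclidean definition of length. Note that if $n = 1$, we have $\|a\| = |a|$.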

Theorem 6.2

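Let $V$ be an inner product space over $F$. Then for all $x, y \in V$ and $c \in F$, the following statements are true.

- $\|cx\| = |c|\,\|x\|$.
- $\|x\| = 0$ if and only if $x = 0$. In any case, $\|x\| \ge 0$.
- (Cauchy-Schwarz Inequality) $|\langle x, y\rangle| \le \|x\|\,\|y\|$.
- (Triangle Inequality) $\|x + y\| \le \|x\| + \|y\|$.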
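The norm and the two inequalities of Theorem 6.2 can be spot-checked numerically in $\mathbb{C}^n$. This is a sketch, not a proof: the random test vectors, the fixed seed and the tolerance `tol` are assumptions of the example. The final lines illustrate that Cauchy-Schwarz becomes an equality when one vector is a scalar multiple of the other.

```python
import math
import random


def inner(x, y):
    return sum(a * complex(b).conjugate() for a, b in zip(x, y))


def norm(x):
    # <x, x> is real and nonnegative, so take the real part before the square root
    return math.sqrt(inner(x, x).real)


def check(x, y, tol=1e-12):
    """True if both Cauchy-Schwarz and the triangle inequality hold (up to rounding)."""
    cs = abs(inner(x, y)) <= norm(x) * norm(y) + tol
    tri = norm([a + b for a, b in zip(x, y)]) <= norm(x) + norm(y) + tol
    return cs and tri


random.seed(0)


def rand_vec(n):
    return [complex(random.uniform(-1, 1), random.uniform(-1, 1)) for _ in range(n)]


print(all(check(rand_vec(4), rand_vec(4)) for _ in range(1000)))  # True

# Equality case: w = 2i * v is a scalar multiple of v
v = [1 + 1j, 4]
w = [2j * a for a in v]
print(abs(inner(v, w)), norm(v) * norm(w))
```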

Example 6.1.7

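For $F^n$, applying the Cauchy-Schwarz and triangle inequalities of Theorem 6.2 to the standard inner product gives the well-known inequalities
$$\Big|\sum_{i=1}^n a_i\overline{b_i}\Big| \le \Big[\sum_{i=1}^n |a_i|^2\Big]^{1/2}\Big[\sum_{i=1}^n |b_i|^2\Big]^{1/2}$$
and
$$\Big[\sum_{i=1}^n |a_i + b_i|^2\Big]^{1/2} \le \Big[\sum_{i=1}^n |a_i|^2\Big]^{1/2} + \Big[\sum_{i=1}^n |b_i|^2\Big]^{1/2}.$$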

Orthogonal [core]

Definitions: orthogonal & orthonormal

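Let $V$ be an inner product space. Vectors $x$ and $y$ in $V$ are *orthogonal* (perpendicular) if $\langle x, y\rangle = 0$. A vector $x$ in $V$ is a *unit vector* if $\|x\| = 1$.

A subset $S$ of $V$ is *orthogonal* if any two distinct vectors in $S$ are orthogonal, and *orthonormal* if $S$ is orthogonal and consists entirely of unit vectors.

Note that if $S = \{v_1, v_2, \dots\}$, then $S$ is orthonormal if and only if $\langle v_i, v_j\rangle = \delta_{ij}$, where $\delta_{ij}$ is the Kronecker delta. Also, multiplying vectors by nonzero scalars does not affect their orthogonality, and if $x$ is any nonzero vector, then $(1/\|x\|)\,x$ is a unit vector. Multiplying a nonzero vector by the reciprocal of its length is called *normalizing*.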

Example 6.1.8

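In $F^3$, $\{(1,1,0), (1,-1,1), (-1,1,2)\}$ is an orthogonal set of nonzero vectors, but it is not orthonormal. Normalizing its vectors gives the orthonormal set
$$\Big\{\tfrac{1}{\sqrt2}(1,1,0),\ \tfrac{1}{\sqrt3}(1,-1,1),\ \tfrac{1}{\sqrt6}(-1,1,2)\Big\}.$$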
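Normalizing and the criterion $\langle v_i, v_j\rangle = \delta_{ij}$ can be checked with a short floating-point sketch of this example. It assumes real vectors, so the inner product is the plain dot product. The Gram matrix of the normalized vectors should be the identity up to rounding.

```python
import math


def dot(x, y):
    return sum(a * b for a, b in zip(x, y))


def normalize(x):
    """Multiply a nonzero vector by the reciprocal of its length."""
    length = math.sqrt(dot(x, x))
    return [a / length for a in x]


S = [(1, 1, 0), (1, -1, 1), (-1, 1, 2)]
U = [normalize(v) for v in S]

# Gram matrix <u_i, u_j>; for an orthonormal set it is the identity (delta_ij)
gram = [[dot(u, v) for v in U] for u in U]
for row in gram:
    print([round(g, 12) for g in row])
```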

Example 6.1.9

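Recall the inner product space $\mathsf{H}$. We introduce an important orthonormal subset $S$ of $\mathsf{H}$.

For what follows, $i$ is the imaginary number with $i^2 = -1$. For any integer $n$, let $f_n(t) = e^{int}$, where $0 \le t \le 2\pi$. (Recall that $e^{int} = \cos nt + i\sin nt$.)

Now define $S = \{f_n : n \text{ is an integer}\}$. Clearly $S$ is a subset of $\mathsf{H}$. Using $\overline{e^{it}} = e^{-it}$ for every real number $t$, we have, for $m \neq n$,
$$\langle f_m, f_n\rangle = \frac{1}{2\pi}\int_0^{2\pi} e^{imt}\,\overline{e^{int}}\,dt = \frac{1}{2\pi}\int_0^{2\pi} e^{i(m-n)t}\,dt = \frac{1}{2\pi i(m-n)}\,e^{i(m-n)t}\Big|_0^{2\pi} = 0.$$

Also,
$$\langle f_n, f_n\rangle = \frac{1}{2\pi}\int_0^{2\pi} e^{i(n-n)t}\,dt = \frac{1}{2\pi}\int_0^{2\pi} 1\,dt = 1.$$

In other words, $\langle f_m, f_n\rangle = \delta_{mn}$.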
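The relation $\langle f_m, f_n\rangle = \delta_{mn}$ can be checked numerically. This sketch replaces the integral by an $N$-point left-endpoint sum, which is an assumption of the example and not part of the definition of $\mathsf{H}$. For these particular integrands $e^{i(m-n)t}$ the sum is exact (up to rounding) whenever $|m - n| < N$, because the $N$-th roots of unity $e^{2\pi i j k/N}$, summed over $k$, cancel for $j \not\equiv 0 \pmod N$. The names `inner_H` and `f_n` are illustrative.

```python
import cmath
import math


def inner_H(f, g, N=64):
    """Approximate <f, g> = (1/2pi) * integral_0^{2pi} f(t) conj(g(t)) dt
    with the N-point left-endpoint rule (step h = 2pi/N)."""
    h = 2 * math.pi / N
    total = sum(f(k * h) * g(k * h).conjugate() for k in range(N))
    return total * h / (2 * math.pi)  # equals total / N


def f_n(n):
    return lambda t: cmath.exp(1j * n * t)


for m in range(-2, 3):
    print([round(abs(inner_H(f_n(m), f_n(n))), 10) for n in range(-2, 3)])
```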

6.2 The Gram-Schmidt Orthogonalization Process and Orthogonal Complements

Orthonormal basis

Definition: orthonormal basis

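Let $V$ be an inner product space. A subset of $V$ is an *orthonormal basis* for $V$ if it is an ordered basis that is orthonormal.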

Example 6.2.1

The standard ordered basis for $F^n$ is an orthonormal basis for $F^n$.

Example 6.2.2

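The set $\Big\{\big(\tfrac{1}{\sqrt5}, \tfrac{2}{\sqrt5}\big), \big(\tfrac{2}{\sqrt5}, -\tfrac{1}{\sqrt5}\big)\Big\}$ is an orthonormal basis for $\mathbb{R}^2$.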

Theorem 6.3

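Let $V$ be an inner product space and $S = \{v_1, \dots, v_k\}$ be an orthogonal subset of $V$ consisting of nonzero vectors. If $y \in \operatorname{span}(S)$, then
$$y = \sum_{i=1}^k a_i v_i,$$
where $a_i = \dfrac{\langle y, v_i\rangle}{\|v_i\|^2}$.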

Corollary of Theorem 6.3

Corollary

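- (Corollary 1) If, in addition to the hypotheses of Theorem 6.3, $S$ is orthonormal and $y \in \operatorname{span}(S)$, then $y = \sum_{i=1}^k \langle y, v_i\rangle v_i$.
- (Corollary 2) Let $V$ be an inner product space, and let $S$ be an orthogonal subset of $V$ consisting of nonzero vectors. Then $S$ is linearly independent.

For Corollary 1: if $V$ possesses a finite orthonormal basis, then Corollary 1 lets us compute the coefficients of a linear combination very easily.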

Example 6.2.3

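By Corollary 2, the orthonormal set
$$\Big\{\tfrac{1}{\sqrt2}(1,1,0),\ \tfrac{1}{\sqrt3}(1,-1,1),\ \tfrac{1}{\sqrt6}(-1,1,2)\Big\}$$
obtained in Example 6.1.8 is linearly independent; being three independent vectors in $\mathbb{R}^3$, it is an orthonormal basis for $\mathbb{R}^3$. Let $x = (2,1,3)$. By Corollary 1, the coefficients expressing $x$ as a linear combination of these basis vectors are
$$a_1 = \tfrac{1}{\sqrt2}(2+1) = \tfrac{3}{\sqrt2},\qquad a_2 = \tfrac{1}{\sqrt3}(2-1+3) = \tfrac{4}{\sqrt3},\qquad a_3 = \tfrac{1}{\sqrt6}(-2+1+6) = \tfrac{5}{\sqrt6}.$$

As a check, we have
$$(2,1,3) = \tfrac32(1,1,0) + \tfrac43(1,-1,1) + \tfrac56(-1,1,2).$$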
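Corollary 1 reduces finding coefficients to taking inner products. A floating-point sketch of this example computes $a_i = \langle x, v_i\rangle$ for $x = (2,1,3)$ and the orthonormal basis of Example 6.1.8, then rebuilds $x$ from them. Real vectors and the dot product are assumed.

```python
import math


def dot(x, y):
    return sum(a * b for a, b in zip(x, y))


r2, r3, r6 = math.sqrt(2), math.sqrt(3), math.sqrt(6)
beta = [[1 / r2, 1 / r2, 0], [1 / r3, -1 / r3, 1 / r3], [-1 / r6, 1 / r6, 2 / r6]]
x = [2, 1, 3]

# Corollary 1: the coefficient of v_i is simply <x, v_i>
coeffs = [dot(x, v) for v in beta]
rebuilt = [sum(a * v[k] for a, v in zip(coeffs, beta)) for k in range(3)]
print(coeffs)   # approximately 3/sqrt(2), 4/sqrt(3), 5/sqrt(6)
print(rebuilt)  # agrees with x up to rounding
```

No linear system has to be solved: orthonormality turns each coefficient into a single inner product.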

Gram-Schmidt process

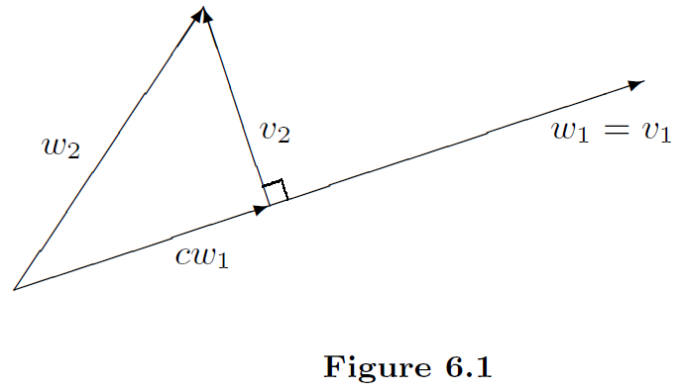

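Before stating this theorem, let us consider a simple case. Suppose that $\{w_1, w_2\}$ is a linearly independent subset of an inner product space (and hence a basis for some two-dimensional subspace). We want to construct an orthogonal set from $\{w_1, w_2\}$ that spans the same subspace.

Figure 6.1 suggests that the set $\{v_1, v_2\}$, where $v_1 = w_1$ and $v_2 = w_2 - c\,w_1$, has this property if $c$ is chosen so that $v_2$ is orthogonal to $w_1$. To find $c$, we solve
$$0 = \langle v_2, w_1\rangle = \langle w_2 - c\,w_1, w_1\rangle = \langle w_2, w_1\rangle - c\,\langle w_1, w_1\rangle.$$

So
$$c = \frac{\langle w_2, w_1\rangle}{\|w_1\|^2}.$$

Thus
$$v_2 = w_2 - \frac{\langle w_2, w_1\rangle}{\|w_1\|^2}\,w_1.$$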

Theorem 6.4: Gram-Schmidt process [core]

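Let $V$ be an inner product space and $S = \{w_1, \dots, w_n\}$ be a linearly independent subset of $V$. Define $S' = \{v_1, \dots, v_n\}$, where $v_1 = w_1$ and
$$v_k = w_k - \sum_{j=1}^{k-1} \frac{\langle w_k, v_j\rangle}{\|v_j\|^2}\,v_j \qquad\text{for } 2 \le k \le n.$$

Then $S'$ is an orthogonal set of nonzero vectors such that $\operatorname{span}(S') = \operatorname{span}(S)$.

This construction of $\{v_1, \dots, v_n\}$ is called the *Gram-Schmidt process*.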

Example 6.2.4 [core]

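In $\mathbb{R}^4$, let $w_1 = (1,0,1,0)$, $w_2 = (1,1,1,1)$, and $w_3 = (0,1,2,1)$. Then $\{w_1, w_2, w_3\}$ is linearly independent. We use the Gram-Schmidt process to compute the orthogonal vectors $v_1, v_2, v_3$, and then normalize them.

Take $v_1 = w_1 = (1,0,1,0)$. Then
$$v_2 = w_2 - \frac{\langle w_2, v_1\rangle}{\|v_1\|^2}\,v_1 = (1,1,1,1) - \frac{2}{2}(1,0,1,0) = (0,1,0,1).$$

Finally,
$$v_3 = w_3 - \frac{\langle w_3, v_1\rangle}{\|v_1\|^2}\,v_1 - \frac{\langle w_3, v_2\rangle}{\|v_2\|^2}\,v_2 = (0,1,2,1) - \frac{2}{2}(1,0,1,0) - \frac{2}{2}(0,1,0,1) = (-1,0,1,0).$$

Normalization yields the orthonormal basis $\{u_1, u_2, u_3\}$ for $\operatorname{span}(\{w_1, w_2, w_3\})$, where
$$u_1 = \tfrac{1}{\sqrt2}(1,0,1,0),\qquad u_2 = \tfrac{1}{\sqrt2}(0,1,0,1),\qquad u_3 = \tfrac{1}{\sqrt2}(-1,0,1,0).$$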
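The recurrence of Theorem 6.4 translates almost line by line into code. This minimal sketch restricts to real vectors with the dot product and uses `fractions.Fraction` so the orthogonal vectors are exact (normalization, which needs square roots, is left out). Raising an error on a zero vector is a design choice of the sketch: Theorem 6.4 guarantees it cannot happen for an independent input. The usage runs it on $w_1 = (1,0,1,0)$, $w_2 = (1,1,1,1)$, $w_3 = (0,1,2,1)$.

```python
from fractions import Fraction


def dot(x, y):
    return sum(Fraction(a) * b for a, b in zip(x, y))


def gram_schmidt(ws):
    """Orthogonalize linearly independent real vectors following Theorem 6.4:
    v_k = w_k - sum_{j<k} <w_k, v_j> / ||v_j||^2 * v_j, in exact rational arithmetic."""
    vs = []
    for w in ws:
        v = [Fraction(c) for c in w]
        for u in vs:
            coef = dot(w, u) / dot(u, u)
            v = [vi - coef * ui for vi, ui in zip(v, u)]
        if all(c == 0 for c in v):
            # impossible for an independent input, by Theorem 6.4
            raise ValueError("input vectors are linearly dependent")
        vs.append(v)
    return vs


ws = [(1, 0, 1, 0), (1, 1, 1, 1), (0, 1, 2, 1)]
for v in gram_schmidt(ws):
    print([str(c) for c in v])
# prints ['1', '0', '1', '0'], then ['0', '1', '0', '1'], then ['-1', '0', '1', '0']
```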

Example 6.2.5

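Let $V = P(\mathbb{R})$ with the inner product $\langle f, g\rangle = \int_{-1}^1 f(t)g(t)\,dt$, and consider the subspace $P_2(\mathbb{R})$ with the standard ordered basis $\{1, x, x^2\}$. We use the Gram-Schmidt process to replace it by an orthogonal basis $\{v_1, v_2, v_3\}$ for $P_2(\mathbb{R})$, and then normalize.

Take $v_1 = 1$. Then $\|v_1\|^2 = \int_{-1}^1 1^2\,dt = 2$ and $\langle x, v_1\rangle = \int_{-1}^1 t\,dt = 0$. Thus
$$v_2 = x - \frac{\langle x, v_1\rangle}{\|v_1\|^2}\,v_1 = x - \frac{0}{2} = x.$$

Furthermore, $\langle x^2, v_1\rangle = \int_{-1}^1 t^2\,dt = \tfrac23$, $\langle x^2, v_2\rangle = \int_{-1}^1 t^3\,dt = 0$, and $\|v_2\|^2 = \int_{-1}^1 t^2\,dt = \tfrac23$.

Therefore
$$v_3 = x^2 - \frac{\langle x^2, v_1\rangle}{\|v_1\|^2}\,v_1 - \frac{\langle x^2, v_2\rangle}{\|v_2\|^2}\,v_2 = x^2 - \frac13\cdot 1 - 0\cdot x = x^2 - \frac13.$$

We conclude that $\{1,\ x,\ x^2 - \frac13\}$ is an orthogonal basis for $P_2(\mathbb{R})$.

To obtain an orthonormal basis, we normalize $v_1$, $v_2$, $v_3$:
$$u_1 = \frac{1}{\sqrt{\int_{-1}^1 1^2\,dt}} = \frac{1}{\sqrt2},\qquad u_2 = \frac{x}{\sqrt{\int_{-1}^1 t^2\,dt}} = \sqrt{\tfrac32}\,x,$$

and similarly, since $\|v_3\|^2 = \int_{-1}^1 \big(t^2 - \tfrac13\big)^2\,dt = \tfrac{8}{45}$,
$$u_3 = \frac{v_3}{\|v_3\|} = \sqrt{\tfrac58}\,(3x^2 - 1).$$

Thus $\{u_1, u_2, u_3\}$ is the desired orthonormal basis for $P_2(\mathbb{R})$.

If we continue applying the Gram-Schmidt orthogonalization process to the basis $\{1, x, x^2, \dots\}$ for $P(\mathbb{R})$, we obtain an orthogonal basis whose elements are, up to scalar multiples, the Legendre polynomials; $v_1$, $v_2$, $v_3$ above correspond to the first three.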
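The same Gram-Schmidt recurrence works for polynomials once the inner product $\int_{-1}^1 f(t)g(t)\,dt$ is available. This sketch assumes coefficient-list polynomials `[c0, c1, ...]` and exact `Fraction` arithmetic, and uses the fact that $\int_{-1}^1 t^k\,dt$ is $\tfrac{2}{k+1}$ for even $k$ and $0$ for odd $k$. Running it on $1, x, x^2, x^3$ reproduces $1,\ x,\ x^2 - \tfrac13$ and continues one step further to $x^3 - \tfrac35 x$.

```python
from fractions import Fraction


def poly_mul(p, q):
    out = [Fraction(0)] * (len(p) + len(q) - 1)
    for i, a in enumerate(p):
        for j, b in enumerate(q):
            out[i + j] += a * b
    return out


def ip(p, q):
    """<f, g> = integral_{-1}^{1} f(t) g(t) dt, computed exactly."""
    prod = poly_mul(p, q)
    # integral of t^k over [-1, 1] is 2/(k+1) for even k and 0 for odd k
    return sum(c * Fraction(2, k + 1) for k, c in enumerate(prod) if k % 2 == 0)


def sub_scaled(p, c, q):
    """Return p - c*q, padding the shorter coefficient list with zeros."""
    n = max(len(p), len(q))
    p = list(p) + [0] * (n - len(p))
    q = list(q) + [0] * (n - len(q))
    return [a - c * b for a, b in zip(p, q)]


def gram_schmidt_poly(ws):
    vs = []
    for w in ws:
        v = [Fraction(c) for c in w]
        for u in vs:
            v = sub_scaled(v, ip(w, u) / ip(u, u), u)
        vs.append(v)
    return vs


# 1, x, x^2, x^3 as coefficient lists [c0, c1, ...]
vs = gram_schmidt_poly([[1], [0, 1], [0, 0, 1], [0, 0, 0, 1]])
for v in vs:
    print([str(c) for c in v])
# ['1'], ['0', '1'], ['-1/3', '0', '1'], ['0', '-3/5', '0', '1']
```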

Theorem 6.5

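Let $V$ be a nonzero finite-dimensional inner product space. Then $V$ has an orthonormal basis $\beta$. Furthermore, if $\beta = \{v_1, \dots, v_n\}$ and $x \in V$, then
$$x = \sum_{i=1}^n \langle x, v_i\rangle\, v_i.$$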

Example 6.2.6

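We use Theorem 6.5 to represent the polynomial $f(x) = 1 + 2x + 3x^2$ as a linear combination of the vectors in the orthonormal basis $\{u_1, u_2, u_3\}$ for $P_2(\mathbb{R})$ obtained in Example 6.2.5. Observe that
$$\langle f, u_1\rangle = \int_{-1}^1 \tfrac{1}{\sqrt2}\,(1 + 2t + 3t^2)\,dt = 2\sqrt2,\qquad \langle f, u_2\rangle = \int_{-1}^1 \sqrt{\tfrac32}\;t\,(1 + 2t + 3t^2)\,dt = \frac{2\sqrt6}{3},$$
$$\langle f, u_3\rangle = \int_{-1}^1 \sqrt{\tfrac58}\,(3t^2 - 1)(1 + 2t + 3t^2)\,dt = \frac{2\sqrt{10}}{5}.$$

Therefore,
$$f(x) = 2\sqrt2\,u_1 + \frac{2\sqrt6}{3}\,u_2 + \frac{2\sqrt{10}}{5}\,u_3.$$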
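The coefficients $2\sqrt2$, $\tfrac{2\sqrt6}{3}$, $\tfrac{2\sqrt{10}}{5}$ can be verified in exact arithmetic by working with the orthogonal basis $v_1 = 1$, $v_2 = x$, $v_3 = x^2 - \tfrac13$ instead of the normalized $u_i$. Since $u_i = v_i / \|v_i\|$, we have $\langle f, u_i\rangle^2 = \langle f, v_i\rangle^2 / \|v_i\|^2$, and the squares of the claimed coefficients are $8$, $\tfrac83$, $\tfrac85$. The sketch (coefficient lists, `Fraction`) also recovers $f$ via Theorem 6.3.

```python
from fractions import Fraction


def poly_mul(p, q):
    out = [Fraction(0)] * (len(p) + len(q) - 1)
    for i, a in enumerate(p):
        for j, b in enumerate(q):
            out[i + j] += a * b
    return out


def ip(p, q):
    """<f, g> = integral_{-1}^{1} f(t) g(t) dt, computed exactly."""
    prod = poly_mul(p, q)
    return sum(c * Fraction(2, k + 1) for k, c in enumerate(prod) if k % 2 == 0)


f = [1, 2, 3]                                # 1 + 2x + 3x^2
V = [[1], [0, 1], [Fraction(-1, 3), 0, 1]]   # orthogonal basis v_1, v_2, v_3 of P_2(R)

# Theorem 6.3 with the orthogonal basis: coefficient of v_i is <f, v_i> / ||v_i||^2
coeffs = [ip(f, v) / ip(v, v) for v in V]
# Squared Fourier coefficients <f, u_i>^2 = <f, v_i>^2 / ||v_i||^2
squares = [ip(f, v) ** 2 / ip(v, v) for v in V]

# Recombine sum_i coeffs[i] * v_i to recover f
rebuilt = [Fraction(0)] * 3
for a, v in zip(coeffs, V):
    for k, c in enumerate(v):
        rebuilt[k] += a * c

print([str(c) for c in coeffs])   # ['2', '2', '3']
print([str(s) for s in squares])  # ['8', '8/3', '8/5']
```

In words, $f = 2\cdot 1 + 2x + 3\big(x^2 - \tfrac13\big)$, which expands back to $1 + 2x + 3x^2$.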

Corollary of Theorem 6.5

Corollary

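Let $V$ be a finite-dimensional inner product space with an orthonormal basis $\beta = \{v_1, \dots, v_n\}$. Let $T$ be a linear operator on $V$, and let $A = [T]_\beta$. Then for any $i$ and $j$, $A_{ij} = \langle T(v_j), v_i\rangle$.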
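The corollary gives every matrix entry as one inner product. This sketch uses the orthonormal basis of Example 6.2.2 and a hypothetical operator $T(a, b) = (a + 2b,\ 3a + 4b)$ chosen only for illustration. It computes $A_{ij} = \langle T(v_j), v_i\rangle$ and then checks the defining property of $[T]_\beta$, namely $T(v_j) = \sum_i A_{ij} v_i$, in floating point.

```python
import math


def dot(x, y):
    return sum(a * b for a, b in zip(x, y))


s5 = math.sqrt(5)
beta = [(1 / s5, 2 / s5), (2 / s5, -1 / s5)]  # orthonormal basis of Example 6.2.2


def T(v):
    """Hypothetical operator on R^2 used only for illustration: T(a, b) = (a + 2b, 3a + 4b)."""
    a, b = v
    return (a + 2 * b, 3 * a + 4 * b)


# Corollary: A_ij = <T(v_j), v_i>
A = [[dot(T(beta[j]), beta[i]) for j in range(2)] for i in range(2)]

# Defining property of [T]_beta: T(v_j) = sum_i A_ij v_i
for j in range(2):
    lhs = T(beta[j])
    rhs = [sum(A[i][j] * beta[i][k] for i in range(2)) for k in range(2)]
    print(all(math.isclose(p, q, abs_tol=1e-12) for p, q in zip(lhs, rhs)))  # True
```

Because the trace does not depend on the basis, $A_{11} + A_{22}$ should equal $1 + 4 = 5$, the trace of $T$'s standard matrix.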

Fourier coefficients [core]

Definition: Fourier coefficients

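Let $\beta$ be an orthonormal subset (possibly infinite) of an inner product space $V$, and let $x \in V$. We define the *Fourier coefficients* of $x$ relative to $\beta$ to be the scalars $\langle x, y\rangle$, where $y \in \beta$.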

Example 6.2.7

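Let $S = \{f_n : n \text{ is an integer}\}$, where $f_n(t) = e^{int}$; by Example 6.1.9, $S$ is an orthonormal subset of $\mathsf{H}$. We compute the Fourier coefficients of $f(t) = t$ relative to $S$. Using integration by parts, we have, for $n \neq 0$,
$$\langle f, f_n\rangle = \frac{1}{2\pi}\int_0^{2\pi} t\,\overline{e^{int}}\,dt = \frac{1}{2\pi}\int_0^{2\pi} t\,e^{-int}\,dt = \frac{1}{2\pi}\cdot\frac{2\pi}{-in} = \frac{i}{n},$$

and for $n = 0$,
$$\langle f, f_0\rangle = \frac{1}{2\pi}\int_0^{2\pi} t\,dt = \pi.$$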
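The Fourier coefficients $\langle t, f_n\rangle = \tfrac{i}{n}$ ($n \neq 0$) and $\langle t, f_0\rangle = \pi$ can be confirmed by numerical integration. The choice of composite Simpson's rule with `N = 2000` subintervals is an assumption of this sketch; it gives errors far below the test tolerance for small $|n|$. The names `simpson` and `fourier_coefficient` are illustrative.

```python
import cmath
import math


def simpson(g, a, b, N=2000):
    """Composite Simpson's rule with an even number N of subintervals."""
    if N % 2:
        raise ValueError("N must be even")
    h = (b - a) / N
    total = g(a) + g(b)
    for k in range(1, N):
        total += (4 if k % 2 else 2) * g(a + k * h)
    return total * h / 3


def fourier_coefficient(f, n, N=2000):
    """<f, f_n> = (1/2pi) * integral_0^{2pi} f(t) * conj(e^{int}) dt, approximated numerically."""
    return simpson(lambda t: f(t) * cmath.exp(-1j * n * t), 0.0, 2 * math.pi, N) / (2 * math.pi)


f = lambda t: t
for n in [-2, -1, 0, 1, 2]:
    exact = math.pi if n == 0 else 1j / n
    print(n, fourier_coefficient(f, n), exact)
```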

Orthogonal complement

Definition: orthogonal complement

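Let $S$ be a nonempty subset of an inner product space $V$. We define $S^\perp$ (read "$S$ perp") to be the set of all vectors in $V$ that are orthogonal to every vector in $S$; that is,
$$S^\perp = \{x \in V : \langle x, y\rangle = 0 \text{ for all } y \in S\}.$$

The set $S^\perp$ is called the *orthogonal complement* of $S$. It is easily seen that $S^\perp$ is a subspace of $V$ for any subset $S$ of $V$.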

Example 6.2.8

The reader should verify that $\{0\}^\perp = V$ and $V^\perp = \{0\}$ for any inner product space $V$.

Example 6.2.9

If $V = \mathbb{R}^3$ and $S = \{e_3\}$, then $S^\perp$ equals the $xy$-plane.

Let

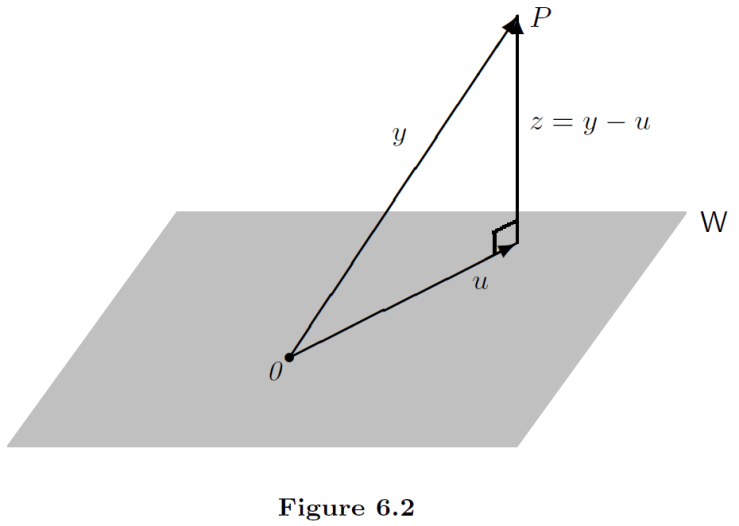

We may think of

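Consider the problem in $\mathbb{R}^3$ of finding the distance from a point $P$ to a plane $W$. If we let $y$ be the vector determined by $0$ and $P$, we may restate the problem as follows.

Determine the vector $u$ in $W$ that is "closest" to $y$. The desired distance is then $\|y - u\|$, and the vector $z = y - u$ is orthogonal to every vector in $W$, so $z \in W^\perp$.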

Orthogonal projection

Theorem 6.6

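Let $W$ be a finite-dimensional subspace of an inner product space $V$, and let $y \in V$. Then there exist unique vectors $u \in W$ and $z \in W^\perp$ such that $y = u + z$. Furthermore, if $\{v_1, \dots, v_k\}$ is an orthonormal basis for $W$, then
$$u = \sum_{i=1}^k \langle y, v_i\rangle\, v_i.$$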

P.S. From Corollary 1 of Theorem 6.3:

Corollary

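In the notation of Theorem 6.6, the vector $u$ is the unique vector in $W$ that is "closest" to $y$: for any $x \in W$, $\|y - x\| \ge \|y - u\|$, with equality if and only if $x = u$.

The vector $u$ in the corollary is called the *orthogonal projection* of $y$ on $W$.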

Example 6.2.10

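Let $V = P_3(\mathbb{R})$ with the inner product $\langle f, g\rangle = \int_{-1}^1 f(t)g(t)\,dt$ for all $f, g \in V$.

We compute the orthogonal projection $f_1$ of $f(x) = x^3$ on $P_2(\mathbb{R})$.

By Example 6.2.5,
$$\{u_1, u_2, u_3\} = \Big\{\tfrac{1}{\sqrt2},\ \sqrt{\tfrac32}\,x,\ \sqrt{\tfrac58}\,(3x^2 - 1)\Big\}$$
is an orthonormal basis for $P_2(\mathbb{R})$.

Computing inner products:
$$\langle f, u_1\rangle = \int_{-1}^1 \tfrac{1}{\sqrt2}\,t^3\,dt = 0,\qquad \langle f, u_2\rangle = \int_{-1}^1 \sqrt{\tfrac32}\,t^4\,dt = \frac{\sqrt6}{5},\qquad \langle f, u_3\rangle = \int_{-1}^1 \sqrt{\tfrac58}\,t^3(3t^2 - 1)\,dt = 0.$$

Hence
$$f_1(x) = \langle f, u_1\rangle u_1 + \langle f, u_2\rangle u_2 + \langle f, u_3\rangle u_3 = \frac{\sqrt6}{5}\sqrt{\tfrac32}\,x = \frac35\,x.$$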
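The projection $f_1 = \tfrac35 x$ of $x^3$ can be reproduced exactly with the orthogonal (unnormalized) basis $1,\ x,\ x^2 - \tfrac13$ of $P_2(\mathbb{R})$, since $\sum_i \frac{\langle f, v_i\rangle}{\|v_i\|^2} v_i = \sum_i \langle f, u_i\rangle u_i$. This sketch (coefficient lists, `Fraction`; helper names illustrative) also checks the two claims of Theorem 6.6 and its corollary: $z = f - f_1$ lies in $W^\perp$, and another element of $W$, here $x$, is farther from $f$.

```python
from fractions import Fraction


def poly_mul(p, q):
    out = [Fraction(0)] * (len(p) + len(q) - 1)
    for i, a in enumerate(p):
        for j, b in enumerate(q):
            out[i + j] += a * b
    return out


def ip(p, q):
    """<f, g> = integral_{-1}^{1} f(t) g(t) dt, computed exactly."""
    prod = poly_mul(p, q)
    return sum(c * Fraction(2, k + 1) for k, c in enumerate(prod) if k % 2 == 0)


def project(f, V):
    """Orthogonal projection onto span(V) for an orthogonal (not necessarily normalized) V."""
    n = max(len(f), max(len(v) for v in V))
    u = [Fraction(0)] * n
    for v in V:
        c = ip(f, v) / ip(v, v)
        for k, a in enumerate(v):
            u[k] += c * a
    return u


f = [0, 0, 0, 1]                             # x^3
V = [[1], [0, 1], [Fraction(-1, 3), 0, 1]]   # orthogonal basis of P_2(R)

f1 = project(f, V)
z = [a - b for a, b in zip(f, f1)]           # z = f - f1 should lie in W-perp
print([str(c) for c in f1])                  # ['0', '3/5', '0', '0']
print(ip(z, z), ip([0, -1, 0, 1], [0, -1, 0, 1]))  # squared distances to f1 and to x
```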

Theorem 6.7

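Suppose that $S = \{v_1, \dots, v_k\}$ is an orthonormal set in an $n$-dimensional inner product space $V$. Then:

- $S$ can be extended to an orthonormal basis $\{v_1, \dots, v_k, v_{k+1}, \dots, v_n\}$ for $V$.
- If $W = \operatorname{span}(S)$, then $S_1 = \{v_{k+1}, \dots, v_n\}$ is an orthonormal basis for $W^\perp$ (using the preceding notation).
- If $W$ is any subspace of $V$, then $\dim(V) = \dim(W) + \dim(W^\perp)$.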

Example 6.2.11

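Let $W = \operatorname{span}(\{e_1, e_2\})$ in $F^3$. Then $x = (a, b, c) \in W^\perp$ if and only if $0 = \langle x, e_1\rangle = a$ and $0 = \langle x, e_2\rangle = b$.

So $x = (0, 0, c)$, and therefore $W^\perp = \operatorname{span}(\{e_3\})$. The same result follows from the last part of Theorem 6.7: $e_3 \in W^\perp$ and $\dim(W^\perp) = 3 - 2 = 1$.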
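One constructive way to carry out the extension in Theorem 6.7, sketched here under stated assumptions, is to run Gram-Schmidt on $S$ followed by the standard vectors $e_1, \dots, e_n$, discarding any vector that becomes zero. The vectors added after $S$ then span $W^\perp$. To stay in exact `Fraction` arithmetic the sketch assumes real vectors and an orthogonal input set $S$, and returns an orthogonal (not normalized) basis of $W^\perp$. The function name is hypothetical. It reproduces $W^\perp = \operatorname{span}(\{e_3\})$ for $W = \operatorname{span}(\{e_1, e_2\})$.

```python
from fractions import Fraction


def dot(x, y):
    return sum(Fraction(a) * b for a, b in zip(x, y))


def orthogonal_complement_basis(S, n):
    """Given an orthogonal set S of nonzero vectors in R^n, run Gram-Schmidt on S followed
    by e_1, ..., e_n, dropping vectors that become zero. The vectors added after S form an
    orthogonal basis for span(S)-perp (normalize them for an orthonormal one)."""
    basis = [[Fraction(c) for c in v] for v in S]
    k = len(basis)
    for i in range(n):
        e = [Fraction(int(i == j)) for j in range(n)]
        v = list(e)
        for u in basis:
            coef = dot(e, u) / dot(u, u)
            v = [a - coef * b for a, b in zip(v, u)]
        if any(c != 0 for c in v):  # e was not already in the span
            basis.append(v)
    return basis[k:]


perp = orthogonal_complement_basis([(1, 0, 0), (0, 1, 0)], 3)
print([[str(c) for c in v] for v in perp])  # [['0', '0', '1']]
```

The count of returned vectors is $n - \dim(W)$, matching $\dim(V) = \dim(W) + \dim(W^\perp)$.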