Functions, Linear Transformations, and Matrices

Appendix B: Functions

function

If

If

Finally, two functions

Example B.1

Suppose that

Then

one-to-one, onto

As Example B.1 shows, the preimage of an element in the range need not be unique. Functions such that each element of the range has a unique preimage are called one-to-one (injective); that is, $f(x) = f(y)$ implies $x = y$.

If

one-to-one + onto = bijective

Let

- For example, let

, and . Then , whereas . Hence, .
- Functional composition is associative; that is, if $h$ is another function such that $h \circ g$ is defined, then $h \circ (g \circ f) = (h \circ g) \circ f$.
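Composition can be made concrete in a few lines. This is a minimal sketch: the functions `f`, `g`, and `h` below are hypothetical choices made only for illustration, not the functions from the original example. It shows that the order of composition matters and spot-checks associativity on sample points.

```python
# Sketch of function composition; f, g, h are hypothetical example functions.

def compose(outer, inner):
    """Return the composite outer ∘ inner, i.e. x -> outer(inner(x))."""
    return lambda x: outer(inner(x))

def f(x):
    return x + 1      # hypothetical f

def g(x):
    return 2 * x      # hypothetical g

def h(x):
    return x * x      # hypothetical h

g_after_f = compose(g, f)   # (g ∘ f)(x) = 2(x + 1)
f_after_g = compose(f, g)   # (f ∘ g)(x) = 2x + 1

print(g_after_f(3), f_after_g(3))  # 8 7, so g ∘ f != f ∘ g

# Associativity: h ∘ (g ∘ f) and (h ∘ g) ∘ f agree at every sampled point.
left = compose(h, compose(g, f))
right = compose(compose(h, g), f)
assert all(left(x) == right(x) for x in range(-5, 6))
```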

A function

The following facts about invertible functions are easily proved:

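- If $f$ is invertible, then $f^{-1}$ is invertible, and $(f^{-1})^{-1} = f$.
- If $f$ and $g$ are invertible (with $g \circ f$ defined), then $g \circ f$ is invertible, and $(g \circ f)^{-1} = f^{-1} \circ g^{-1}$.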
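These facts can be checked mechanically on small finite sets. This sketch assumes that functions are encoded as Python dicts, and the particular bijections `f` and `g` are hypothetical. Inverting a dict swaps keys and values, and the sketch confirms that $(g \circ f)^{-1} = f^{-1} \circ g^{-1}$ and $(f^{-1})^{-1} = f$.

```python
# Sketch: bijections between small finite sets stored as dicts (hypothetical data).

def inverse(fn):
    """Invert a dict-encoded function; raise if it is not one-to-one."""
    inv = {y: x for x, y in fn.items()}
    if len(inv) != len(fn):
        raise ValueError("function is not one-to-one, so it has no inverse")
    return inv

def compose(outer, inner):
    """Composite outer ∘ inner for dict-encoded functions."""
    return {x: outer[inner[x]] for x in inner}

f = {1: "a", 2: "b", 3: "c"}        # f: A -> B
g = {"a": "z", "b": "x", "c": "y"}  # g: B -> C

gf = compose(g, f)
# (g ∘ f)^{-1} = f^{-1} ∘ g^{-1}
assert inverse(gf) == compose(inverse(f), inverse(g))
# (f^{-1})^{-1} = f
assert inverse(inverse(f)) == f
```

Note that the inverse reverses the order of composition, just as putting on socks and then shoes is undone by removing shoes first.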

2.1 Linear Transformations, Null Spaces, and Ranges

Linear Transformation

It is now natural to consider functions defined on vector spaces that, in some sense, "preserve" the vector space structure.

Definition: linear transformation

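Let $V$ and $W$ be vector spaces over a field $F$. We call a function $T: V \to W$ a linear transformation from $V$ to $W$ if, for all $x, y \in V$ and $c \in F$, we have

- $T(x + y) = T(x) + T(y)$ (additivity);
- $T(cx) = cT(x)$ (scaling).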

We often simply call $T$ linear.

Properties

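- If $T$ is linear, then $T(0) = 0$.
- $T$ is linear if and only if $T(cx + y) = cT(x) + T(y)$ for all $x, y \in V$ and $c \in F$. (Generally used to prove that a given transformation is linear.) [core]
- If $T$ is linear, then $T(x - y) = T(x) - T(y)$ for all $x, y \in V$.
- $T$ is linear if and only if, for $x_1, x_2, \ldots, x_n \in V$ and $a_1, a_2, \ldots, a_n \in F$, we have $T\left(\sum_{i=1}^{n} a_i x_i\right) = \sum_{i=1}^{n} a_i T(x_i)$.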
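The criterion $T(cx + y) = cT(x) + T(y)$ can be spot-checked on sample vectors. This is a sketch under stated assumptions: the maps `T1` and `T2` on $\mathbb{R}^2$ are hypothetical, and the helper name `looks_linear` is invented for illustration. Passing such a finite check does not prove linearity. Failing it does disprove linearity.

```python
from fractions import Fraction
import itertools

# Sketch: test T(cx + y) == cT(x) + T(y) on a grid of sample vectors in Q^2.
# A pass is only evidence; a single failure proves T is not linear.

def add(u, v):
    return tuple(a + b for a, b in zip(u, v))

def scale(c, u):
    return tuple(c * a for a in u)

def looks_linear(T, samples, scalars):
    for x, y in itertools.product(samples, repeat=2):
        for c in scalars:
            if T(add(scale(c, x), y)) != add(scale(c, T(x)), T(y)):
                return False
    return True

samples = [(Fraction(a), Fraction(b)) for a in range(-2, 3) for b in range(-2, 3)]
scalars = [Fraction(-3), Fraction(1, 2), Fraction(2)]

T1 = lambda v: (2 * v[0] + v[1], v[0])   # hypothetical map; linear
T2 = lambda v: (v[0] + 1, v[1])          # hypothetical translation; T2(0) != 0

print(looks_linear(T1, samples, scalars))  # True
print(looks_linear(T2, samples, scalars))  # False
```

The translation `T2` already fails the first property, since $T_2(0) \neq 0$.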

Example 1

Define

To show that

we have

Also

So

Example 2: rotation

For any angle

Then

We determine an explicit formula for

Finally, observe that this same formula is valid for
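The rotation formula can be evaluated numerically. This sketch uses the standard formula for counterclockwise rotation by $\theta$, $T_\theta(a_1, a_2) = (a_1\cos\theta - a_2\sin\theta,\ a_1\sin\theta + a_2\cos\theta)$. It uses floating point, so the results are only approximate. The helper name `rotate` is hypothetical.

```python
import math

def rotate(theta, v):
    """Counterclockwise rotation by theta radians:
    T_theta(a1, a2) = (a1 cos θ - a2 sin θ, a1 sin θ + a2 cos θ)."""
    a1, a2 = v
    c, s = math.cos(theta), math.sin(theta)
    return (a1 * c - a2 * s, a1 * s + a2 * c)

x = rotate(math.pi / 2, (1.0, 0.0))     # e1 rotated by 90 degrees
print([round(t, 12) for t in x])        # [0.0, 1.0]
```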

Examples 3 & 4: Reflection

Define

Define

Example 5: Polynomial

Define

So by property 2 above,

Example 6: integration

Let

for all

Special transformations

For vector spaces

We now turn our attention to two very important sets associated with linear transformations: the range and null space. The determination of these sets allows us to examine more closely the intrinsic properties of a linear transformation.

Definitions: null space/kernel; range / image [core]

Let

We define the range (or image)

Example 7: identity and zero transformation

Let

Example 8

Let

It's easy to verify that:

Theorem 2.1

Let

Theorem 2.2

Let

Recall Theorem 1.5 in §1, which relates subspaces and spans.

Example 9

Define the linear transformation

Since

Thus, we have found a basis for

Definition and Theorem

As in §1.6, we measure the "size" of a subspace by its dimension. The null space and range are so important that we attach special names to their respective dimensions.

Definition: nullity; rank [core]

Let

- The nullity of $T$, denoted $\operatorname{nullity}(T)$, is the dimension of $N(T)$.
- The rank of $T$, denoted $\operatorname{rank}(T)$, is the dimension of $R(T)$.

Theorem 2.3: Dimension Theorem [core]

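Let $V$ and $W$ be vector spaces, and let $T: V \to W$ be linear. If $V$ is finite-dimensional, then $\operatorname{nullity}(T) + \operatorname{rank}(T) = \dim(V)$.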
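For a left-multiplication map $x \mapsto Ax$ on $\mathbb{Q}^n$, the rank can be read from the pivots of a row reduction, and a basis of the null space comes from the free columns. This is a sketch that assumes exact rational arithmetic and a hypothetical $2 \times 3$ matrix. The helpers `rref` and `null_space_basis` are illustrative names, not library functions. The test also checks that each basis vector really lies in the null space.

```python
from fractions import Fraction

def rref(rows):
    """Reduced row echelon form over Q; returns (matrix, list of pivot columns)."""
    M = [[Fraction(x) for x in row] for row in rows]
    m, n = len(M), len(M[0])
    pivots, r = [], 0
    for col in range(n):
        pivot = next((i for i in range(r, m) if M[i][col] != 0), None)
        if pivot is None:
            continue                      # no pivot here: a free column
        M[r], M[pivot] = M[pivot], M[r]
        lead = M[r][col]
        M[r] = [x / lead for x in M[r]]
        for i in range(m):
            if i != r and M[i][col] != 0:
                factor = M[i][col]
                M[i] = [a - factor * b for a, b in zip(M[i], M[r])]
        pivots.append(col)
        r += 1
        if r == m:
            break
    return M, pivots

def null_space_basis(rows):
    """One basis vector of the null space per free (non-pivot) column."""
    R, pivots = rref(rows)
    n = len(rows[0])
    basis = []
    for free in (c for c in range(n) if c not in pivots):
        v = [Fraction(0)] * n
        v[free] = Fraction(1)
        for i, p in enumerate(pivots):
            v[p] = -R[i][free]
        basis.append(v)
    return basis

A = [[1, 2, 3],
     [2, 4, 6]]          # hypothetical matrix: x -> Ax maps Q^3 to Q^2
_, piv = rref(A)
rank = len(piv)
nullity = len(null_space_basis(A))
print(rank, nullity, rank + nullity == len(A[0]))  # 1 2 True
```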

Theorem 2.4: Null Space and One-to-one [core]

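Let $V$ and $W$ be vector spaces, and let $T: V \to W$ be linear. Then $T$ is one-to-one if and only if $N(T) = \{0\}$.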

Theorem 2.5 [core]

Let

- $T$ is one-to-one.
- $T$ is onto.
- $\operatorname{rank}(T) = \dim(V)$.

Example 10

Let

Now

- Since

is linearly independent, the rank is ;
- Since

is not onto;
- From the dimension theorem,

, so the nullity is , and therefore .

So

Example 11

Let

It's easy to see that

Example 12

Let

Clearly

is linearly independent in

Theorem 2.6


Let

Corollary

Let

Example 13

Let

and suppose that

2.2 The Matrix Representation of a Linear Transformation

coordinate vector

Definition: ordered basis

Let

Example 2.2.1

In

For the vector space

Definition: coordinate vector [core]

Let

We define the coordinate vector of
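A coordinate vector is found by solving for the unique scalars that express a vector in an ordered basis. This sketch makes the following assumptions. Polynomials in $P_2$ are stored as coefficient lists $[c_0, c_1, c_2]$. The basis $\{1, 1 + x, 1 + x + x^2\}$ and the polynomial $4 + 6x - 7x^2$ are hypothetical choices. The helper `coordinates` is an illustrative name. Reordering the basis permutes the coordinates, which is why the basis must be ordered.

```python
from fractions import Fraction

def coordinates(basis, v):
    """[v]_beta: scalars a_i with v = sum a_i * basis[i].
    basis must be n linearly independent vectors in Q^n (an ordered basis)."""
    n = len(v)
    # Augmented matrix: the basis vectors are the columns, v is the last column.
    M = [[Fraction(basis[j][i]) for j in range(n)] + [Fraction(v[i])] for i in range(n)]
    for col in range(n):
        pivot = next(i for i in range(col, n) if M[i][col] != 0)
        M[col], M[pivot] = M[pivot], M[col]
        lead = M[col][col]
        M[col] = [x / lead for x in M[col]]
        for i in range(n):
            if i != col:
                factor = M[i][col]
                M[i] = [a - factor * b for a, b in zip(M[i], M[col])]
    return [row[n] for row in M]

basis = [[1, 0, 0], [1, 1, 0], [1, 1, 1]]   # 1, 1 + x, 1 + x + x^2 (hypothetical)
p = [4, 6, -7]                               # p(x) = 4 + 6x - 7x^2 (hypothetical)
print([str(a) for a in coordinates(basis, p)])   # ['-2', '13', '-7']
```

Check: $-2 + 13(1 + x) - 7(1 + x + x^2) = 4 + 6x - 7x^2$.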

Example 2.2.2: coordinate vector for polynomial

Let

matrix representation

Definition: matrix representation [core]

Using the notation above, we call the

If

Notice that the jth column of
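The column-by-column description translates directly into code: apply $T$ to each vector of the domain basis and record the coordinates of the image as a column. This sketch assumes that $T$ is differentiation from $P_3$ to $P_2$, that both spaces use the standard monomial bases, and that polynomials are stored as coefficient lists. With a standard basis, the coordinate vector of an image is simply its coefficient list. The helper names are hypothetical.

```python
def derivative(coeffs):
    """T(f) = f' on coefficient lists [c0, c1, ..., cn] (c_k multiplies x^k)."""
    return [k * coeffs[k] for k in range(1, len(coeffs))]

def matrix_representation(T, domain_basis):
    """Column j holds the coordinates of T(beta_j); with the standard codomain
    basis, those coordinates are just the coefficient list of T(beta_j)."""
    columns = [T(b) for b in domain_basis]
    return [list(row) for row in zip(*columns)]   # turn columns into rows

beta = [[1 if i == j else 0 for i in range(4)] for j in range(4)]  # 1, x, x^2, x^3
A = matrix_representation(derivative, beta)
for row in A:
    print(row)
# [0, 1, 0, 0]
# [0, 0, 2, 0]
# [0, 0, 0, 3]
```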

Example 2.2.3: matrix representation for tuples

Let

Let

and

Hence

If we let

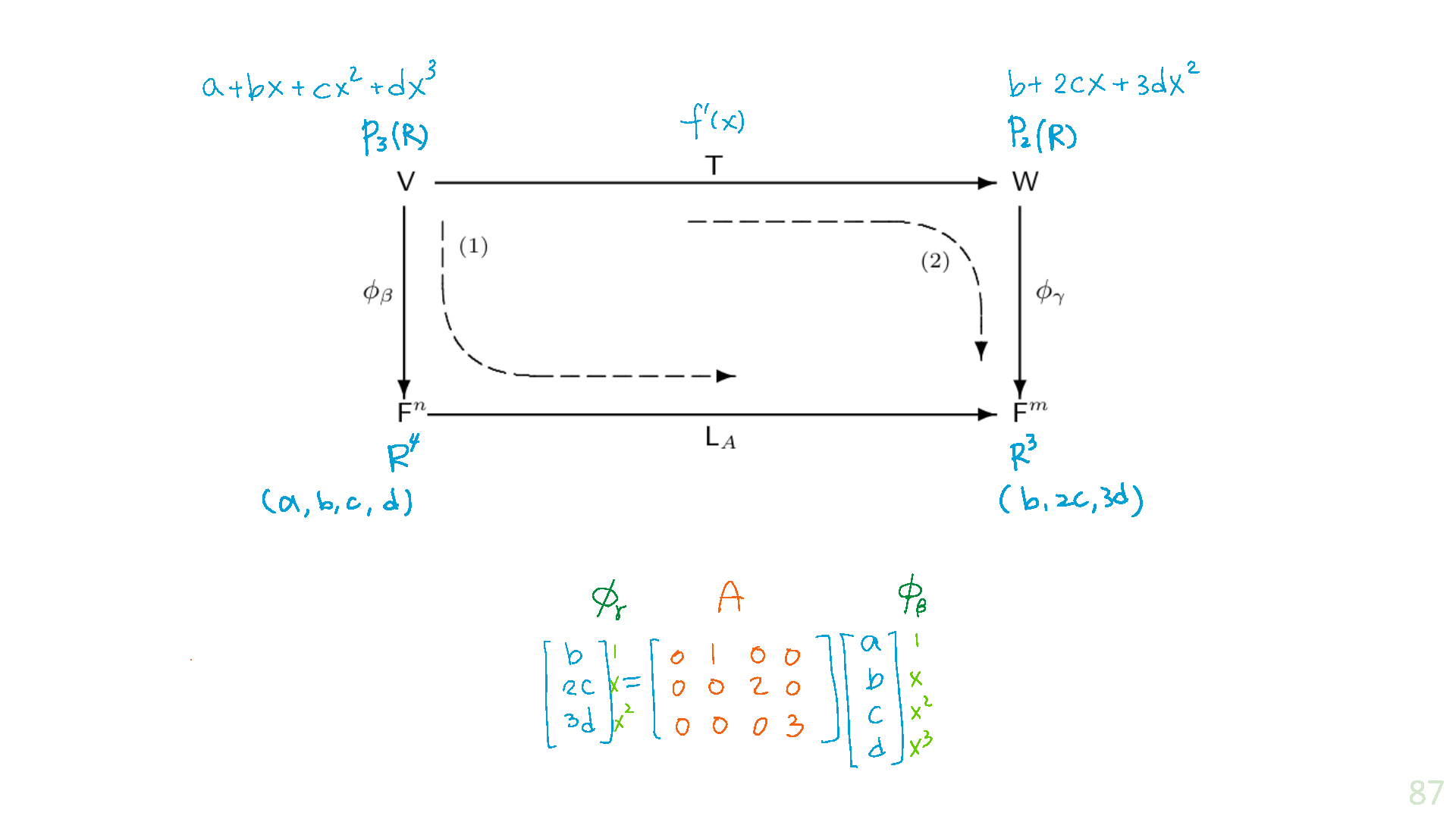

Example 2.2.4: matrix representation for polynomial

Let

So

i.e.

Note that when

operations on linear transformations

Definition

Let

- $T + U: V \to W$ by $(T + U)(x) = T(x) + U(x)$ for all $x \in V$;
- $aT: V \to W$ by $(aT)(x) = aT(x)$ for all $x \in V$.

Theorem 2.7

Let

- For all $a \in F$, $aT + U$ is linear.
- Using the operations of addition and scalar multiplication in the preceding definition, the collection of all linear transformations from $V$ to $W$ is a vector space over $F$.

Definition

Let

Theorem 2.8

Let

for all scalars .

Example 2.2.5

Let

Let

(as computed in Example 2.2.3), and

If we compute

So

which is simply

2.3 Composition of Linear Transformations and Matrix Multiplication

matrix product

Theorem 2.9

Let

Theorem 2.10

Let

and for all scalars .

A more general result holds for linear transformations that have domains unequal to their codomains.

Definition: product

Let

where

This computation motivates the following definition of matrix multiplication.

This is why matrix multiplication is defined the way it is: the product of matrices describes the composition of the corresponding linear transformations. The definition is not arbitrary; it is a natural consequence of how linear transformations compose once ordered bases are fixed.

Definition: product of matrices

Let
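The entrywise rule $(AB)_{ij} = \sum_k A_{ik}B_{kj}$ is short to implement. This sketch uses hypothetical $2 \times 2$ matrices. It shows that $AB \neq BA$ in general, and that multiplying by $AB$ agrees with applying $B$ first and then $A$, which is the composition property that motivates the definition.

```python
def mat_mul(A, B):
    """(AB)_ij = sum_k A_ik * B_kj; requires len(A[0]) == len(B)."""
    if len(A[0]) != len(B):
        raise ValueError("inner dimensions must agree")
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]

def mat_vec(A, x):
    """Matrix-vector product Ax."""
    return [sum(a * b for a, b in zip(row, x)) for row in A]

A = [[1, 2], [0, 1]]    # hypothetical
B = [[0, 1], [1, 0]]    # hypothetical
x = [3, 5]

print(mat_mul(A, B))  # [[2, 1], [1, 0]]
print(mat_mul(B, A))  # [[0, 1], [1, 2]], so AB != BA
# Applying B first and then A agrees with multiplying by AB.
assert mat_vec(A, mat_vec(B, x)) == mat_vec(mat_mul(A, B), x)
```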

Example 2.3.1

We have

Notice again the symbolic relationship

As with composition of functions, matrix multiplication is not commutative: it need not be true that $AB = BA$.

transpose

The transpose

We show that if

and

Therefore the transpose of a product is the product of the transposes in the opposite order.
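The identity $(AB)^t = B^tA^t$ can be checked on rectangular matrices, where the reversed order is forced by the shapes. The matrices below are hypothetical: $A$ is $2 \times 3$ and $B$ is $3 \times 2$. The product $A^tB^t$ would be $3 \times 3$, so it cannot equal the $2 \times 2$ matrix $(AB)^t$.

```python
def transpose(A):
    """(A^t)_ij = A_ji."""
    return [list(col) for col in zip(*A)]

def mat_mul(A, B):
    """(AB)_ij = sum_k A_ik * B_kj, pairing rows of A with columns of B."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

A = [[1, 2, 3], [4, 5, 6]]        # 2x3, hypothetical
B = [[1, 0], [2, 1], [0, -1]]     # 3x2, hypothetical

lhs = transpose(mat_mul(A, B))
rhs = mat_mul(transpose(B), transpose(A))
print(lhs)            # [[5, 14], [-1, -1]]
print(lhs == rhs)     # True
```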

The following is an immediate consequence of our definition of matrix multiplication.

Theorem 2.11 [core]

Let

Corollary

Let

Example 2.3.2 [core]

Let

Let

The preceding

Definition and theorem

Definition: identity matrix

We define the Kronecker delta

Theorem 2.12

Let

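- $A(B + C) = AB + AC$ and $(D + E)A = DA + EA$.
- $a(AB) = (aA)B = A(aB)$ for any scalar $a$.
- $I_m A = A = A I_n$.
- If $V$ is an $n$-dimensional vector space with an ordered basis $\beta$, then $[I_V]_\beta = I_n$.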

Corollary

Let

and

For an

With this notation, we see that if

then

Theorem 2.13

Let

, where is the jth standard vector over .

Theorem 2.14 [core]

Let
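Theorem 2.14 says that coordinates of $T(u)$ can be computed by multiplying the matrix of $T$ by the coordinates of $u$. This sketch checks this for differentiation from $P_3$ to $P_2$ with standard bases. Polynomials are stored as coefficient lists, and the polynomial $p(x) = 2 - 4x + x^2 + 3x^3$ is an illustrative choice.

```python
def derivative(coeffs):
    """d/dx on coefficient lists [c0, c1, ..., cn]."""
    return [k * coeffs[k] for k in range(1, len(coeffs))]

def mat_vec(A, x):
    return [sum(a * b for a, b in zip(row, x)) for row in A]

# [T] for T = d/dx : P3 -> P2 relative to {1, x, x^2, x^3} and {1, x, x^2}
T_matrix = [[0, 1, 0, 0],
            [0, 0, 2, 0],
            [0, 0, 0, 3]]

p = [2, -4, 1, 3]             # p(x) = 2 - 4x + x^2 + 3x^3 (illustrative)
direct = derivative(p)        # coordinates of T(p) = -4 + 2x + 9x^2
via_matrix = mat_vec(T_matrix, p)
print(direct, via_matrix)     # [-4, 2, 9] [-4, 2, 9]
```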

Example 2.3.3

Let

We illustrate Theorem 2.14 by verifying that

but also

left-multiplication transformation

Definition: left-multiplication transformation

Let

Example 2.3.4

Let

Then

then

Theorem 2.15

Let

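- $[L_A]_\beta^\gamma = A$.
- $L_A = L_B$ if and only if $A = B$.
- $L_{A+B} = L_A + L_B$ and $L_{aA} = aL_A$ for all $a \in F$.
- If $T: F^n \to F^m$ is linear, then there exists a unique $m \times n$ matrix $C$ such that $T = L_C$. In fact, $C = [T]_\beta^\gamma$.
- If $E$ is an $n \times p$ matrix, then $L_{AE} = L_A L_E$.
- If $m = n$, then $L_{I_n} = I_{F^n}$.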
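The claim that every linear $T: F^n \to F^m$ equals $L_C$ for $C = [T]_\beta^\gamma$ (standard bases) suggests a recipe: apply $T$ to $e_1, \ldots, e_n$ and use the results as columns. This sketch assumes a hypothetical linear map $T: \mathbb{R}^3 \to \mathbb{R}^2$. The helper names `L` and `standard_matrix` are invented for illustration.

```python
def L(A):
    """Left-multiplication transformation L_A: x -> Ax."""
    return lambda x: [sum(a * b for a, b in zip(row, x)) for row in A]

def standard_matrix(T, n):
    """Recover C = [T] for the standard bases: column j is T(e_j)."""
    cols = [T([1 if i == j else 0 for i in range(n)]) for j in range(n)]
    return [list(r) for r in zip(*cols)]

def T(v):                       # hypothetical linear map R^3 -> R^2
    a1, a2, a3 = v
    return [a1 + 3 * a2, 2 * a1 - a3]

C = standard_matrix(T, 3)
print(C)                        # [[1, 3, 0], [2, 0, -1]]
assert all(T(x) == L(C)(x) for x in ([1, 2, 3], [-1, 0, 4], [0, 0, 0]))
```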

Theorem 2.16: Associativity of Matrix Multiplication

Let

2.4 Invertibility and Isomorphisms

inverse

Definition: inverse

Let

The following facts hold for invertible functions $T$ and $U$ (with $TU$ defined):

- $(TU)^{-1} = U^{-1}T^{-1}$.
- $(T^{-1})^{-1} = T$; in particular, $T^{-1}$ is invertible.

We often use the fact that a function is invertible if and only if it is both one-to-one and onto. We can therefore restate Theorem 2.5 as follows.

- Let $T: V \to W$ be a linear transformation, where $V$ and $W$ are finite-dimensional spaces of equal dimension. Then $T$ is invertible if and only if $\operatorname{rank}(T) = \dim(V)$.

Example 2.4.1

Let

Theorem

Theorem 2.17

Let

Definition: invertible

Let

If $A$ is invertible, then the matrix $B$ such that $AB = BA = I$ is unique; it is called the inverse of $A$ and is denoted by $A^{-1}$.

Example 2.4.2

The reader should verify that the inverse of
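For a $2 \times 2$ matrix, the check the reader is asked to perform can be automated with the standard formula $\begin{pmatrix} a & b \\ c & d \end{pmatrix}^{-1} = \frac{1}{ad - bc}\begin{pmatrix} d & -b \\ -c & a \end{pmatrix}$, valid when $ad - bc \neq 0$. The matrix below is a hypothetical example, and the helper `inverse_2x2` is an illustrative name. The sketch verifies both $AB = I$ and $BA = I$, as the definition requires.

```python
from fractions import Fraction

def inverse_2x2(A):
    """Inverse of [[a, b], [c, d]] as (1/(ad - bc)) [[d, -b], [-c, a]]."""
    (a, b), (c, d) = A
    det = Fraction(a * d - b * c)
    if det == 0:
        raise ValueError("matrix is not invertible")
    return [[d / det, -b / det], [-c / det, a / det]]

def mat_mul(A, B):
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*B)] for row in A]

A = [[5, 7], [2, 3]]                 # hypothetical; ad - bc = 15 - 14 = 1
B = inverse_2x2(A)
print([[str(x) for x in row] for row in B])   # [['3', '-7'], ['-2', '5']]
identity = [[1, 0], [0, 1]]
assert mat_mul(A, B) == identity and mat_mul(B, A) == identity
```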

dimension

Lemma

Let

Theorem 2.18: Criterion for invertibility [core]

Let

Example 2.4.3

Let

For

It can be verified by matrix multiplication that each matrix is the inverse of the other.

Corollary 1

Let

Corollary 2

Let

isomorphism

Definitions: isomorphism

Let

Example 2.4.4

Define

Example 2.4.5

Define

It is easily verified that

Moreover, since

*orphism

A homomorphism is a structure-preserving map. Some types of homomorphisms are:

- Epimorphism: a homomorphism that is surjective (AKA onto)

- Monomorphism: a homomorphism that is injective (AKA one-to-one, 1-1, or univalent)

- Isomorphism: a homomorphism that is bijective (AKA 1-1 and onto); isomorphic objects are equivalent, but perhaps defined in different ways

- Endomorphism: a homomorphism from an object to itself

- Automorphism: a bijective endomorphism (an isomorphism from an object onto itself, essentially just a re-labeling of elements)

Theorem about isomorphism

Theorem 2.19

Let

Corollary

Let

Theorem 2.20

Let

Corollary

Definition: standard representation

Let

Example 2.4.6

Let

We observed earlier that

Theorem 2.21

For any finite-dimensional vector space

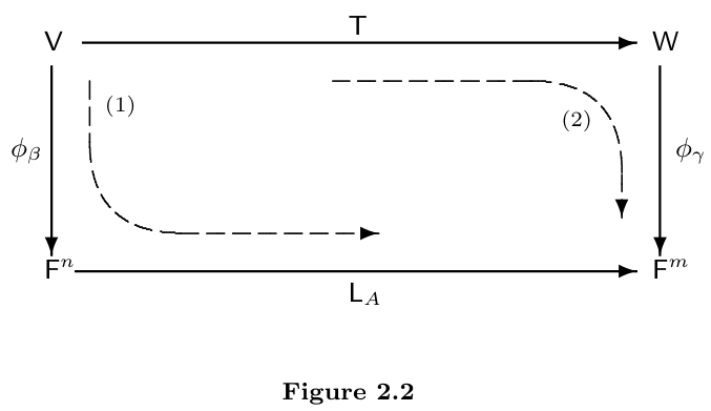

Relationship

Let

Consider the following two composites of linear transformations that map $V$ into $F^m$:

- Map $V$ into $F^n$ with $\phi_\beta$ and follow this transformation with $L_A$; this yields the composite $L_A\phi_\beta$.
- Map $V$ into $W$ with $T$ and follow it by $\phi_\gamma$ to obtain the composite $\phi_\gamma T$.

These two composites are depicted by the dashed arrows in the diagram. By a simple reformulation of Theorem 2.14, we may conclude that

$$L_A\phi_\beta = \phi_\gamma T.$$

That is, the diagram "commutes." Heuristically, this relationship indicates that after

Example 2.4.7

Recall the linear transformation

If

Consider the polynomial

But since

So

2.5 The Change of Coordinate Matrix

Theorem 2.22

Let

- $Q$ is invertible.
- For any $v \in V$, $[v]_\beta = Q[v]_{\beta'}$.

The matrix

for
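A change of coordinate matrix $Q = [I_V]_{\beta'}^{\beta}$ has as its $j$th column the $\beta$-coordinates of the $j$th vector of $\beta'$. The bases below are hypothetical choices in $\mathbb{R}^2$: $\beta = \{(1, 1), (1, -1)\}$ and $\beta' = \{(2, 4), (3, 1)\}$. Coordinates are computed with Cramer's rule. The sketch checks $[v]_\beta = Q[v]_{\beta'}$ for one vector.

```python
from fractions import Fraction

def coords_2d(basis, v):
    """[v]_beta for an ordered basis {u1, u2} of R^2, by Cramer's rule."""
    (p, q), (r, s) = basis          # u1 = (p, q), u2 = (r, s)
    det = Fraction(p * s - r * q)
    x, y = v
    return [(x * s - r * y) / det, (p * y - x * q) / det]

def change_of_coordinate_matrix(new_basis, old_basis):
    """Q = [I_V]_{beta'}^{beta}: column j is [beta'_j]_beta."""
    cols = [coords_2d(old_basis, b) for b in new_basis]
    return [list(r) for r in zip(*cols)]

beta = [(1, 1), (1, -1)]            # hypothetical
beta_prime = [(2, 4), (3, 1)]       # hypothetical
Q = change_of_coordinate_matrix(beta_prime, beta)   # [[3, 2], [-1, 1]]
v = (5, 5)
lhs = coords_2d(beta, v)
rhs = [sum(a * b for a, b in zip(row, coords_2d(beta_prime, v))) for row in Q]
assert lhs == rhs == [5, 0]
```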

Example 2.5.1

In

Since

the change of coordinate matrix

For instance,

For the remainder of this section, we consider only linear transformations that map a vector space

Theorem 2.23 [core]

Theorem 2.23

Let

Proof:

Example 2.5.2: Computing a change of coordinates

Let

Let

be the ordered bases. It can be verified that

(Details of the result above are given here.)

Given that

And

So

The change of coordinate matrix that changes

and

Hence, by Theorem 2.23,

To show that this is the correct matrix, we can verify that the image under

Note that the coefficients of the linear combination are the entries of the second column of

Example 2.5.3: Applying a change of coordinates [core]

Recall the reflection about the x-axis in Example 3 in Section 2.1. The rule

Therefore if we let

Then the matrix representing

Let

and

Then it can be verified that

Since
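The technique behind Theorem 2.23 is to compute a matrix in a convenient basis and then convert it with $[T]_\beta = Q[T]_{\beta'}Q^{-1}$. This sketch assumes, purely for illustration, that $T$ is the reflection of $\mathbb{R}^2$ about the line $y = 2x$. In the basis $\beta' = \{(1, 2), (-2, 1)\}$, $T$ fixes the first vector and negates the second, so $[T]_{\beta'} = \operatorname{diag}(1, -1)$. Here $Q$ has the vectors of $\beta'$ as its columns, and $\beta$ is the standard basis.

```python
from fractions import Fraction

def mat_mul(A, B):
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*B)] for row in A]

def inverse_2x2(A):
    (a, b), (c, d) = A
    det = Fraction(a * d - b * c)
    return [[d / det, -b / det], [-c / det, a / det]]

# Assumed setup: reflection about y = 2x; beta' = {(1, 2), (-2, 1)}.
T_beta_prime = [[1, 0], [0, -1]]   # fixes (1, 2), negates (-2, 1)
Q = [[1, -2], [2, 1]]              # columns: beta' in standard coordinates

# Theorem 2.23 rearranged: [T]_beta = Q [T]_{beta'} Q^{-1}
T_beta = mat_mul(mat_mul(Q, T_beta_prime), inverse_2x2(Q))
print([[str(x) for x in row] for row in T_beta])   # [['-3/5', '4/5'], ['4/5', '3/5']]
```

So, under this assumption, $T(a, b) = \frac{1}{5}(-3a + 4b,\ 4a + 3b)$, a rule that would be hard to guess directly in the standard basis.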

Corollary of Theorem 2.23

Corollary

Let

where

Example 2.5.4

Let

and let

which is an ordered basis for

So by the preceding corollary,

Definition

Let