5 Eigenvalues and Eigenvectors

5.1 Eigenvalues and Eigenvectors

Definition and Theorem

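This chapter is concerned with the so-called diagonalization problem. For a given linear operator $T$ on a finite-dimensional vector space $V$, we seek answers to the following questions:

- Does there exist an ordered basis $\beta$ for $V$ such that $[T]_\beta$ is a diagonal matrix?
- If such a basis exists, how can it be found?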

Definitions: diagonalizable

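A linear operator $T$ on a finite-dimensional vector space $V$ is called diagonalizable if there is an ordered basis $\beta$ for $V$ such that $[T]_\beta$ is a diagonal matrix. A square matrix $A$ is called diagonalizable if $L_A$ is diagonalizable.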

Definitions: eigenvalue & eigenvector

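Let $T$ be a linear operator on a vector space $V$. A nonzero vector $v \in V$ is called an eigenvector of $T$ if there exists a scalar $\lambda$ such that $T(v) = \lambda v$. The scalar $\lambda$ is called the eigenvalue corresponding to the eigenvector $v$.

Let $A \in M_{n \times n}(F)$. A nonzero vector $v \in F^n$ is called an eigenvector of $A$ if $v$ is an eigenvector of $L_A$; that is, if $Av = \lambda v$ for some scalar $\lambda$. The scalar $\lambda$ is called the eigenvalue of $A$ corresponding to the eigenvector $v$.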

Theorem 5.1

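A linear operator $T$ on a finite-dimensional vector space $V$ is diagonalizable if and only if there exists an ordered basis $\beta$ for $V$ consisting of eigenvectors of $T$.

Furthermore, if $T$ is diagonalizable, $\beta = \{v_1, v_2, \ldots, v_n\}$ is an ordered basis of eigenvectors of $T$, and $D = [T]_\beta$, then $D$ is a diagonal matrix and $D_{jj}$ is the eigenvalue corresponding to $v_j$ for $1 \le j \le n$.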

Corollary

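A matrix $A \in M_{n \times n}(F)$ is diagonalizable if and only if there exists an ordered basis for $F^n$ consisting of eigenvectors of $A$. Furthermore, if $\{v_1, v_2, \ldots, v_n\}$ is such a basis and $Q$ is the $n \times n$ matrix whose $j$th column is $v_j$, then $D = Q^{-1}AQ$ is a diagonal matrix such that $D_{jj}$ is the eigenvalue of $A$ corresponding to $v_j$.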

Example 5.1.1

Let

Since

and so

then

Example 5.1.2

Let

It is clear geometrically that for any nonzero vector

While in the field of complex numbers

For basis

Let

Then

Example 5.1.3

Let

Let

Suppose

(Here, the function

Theorem 5.2

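Let $A \in M_{n \times n}(F)$. Then a scalar $\lambda$ is an eigenvalue of $A$ if and only if $\det(A - \lambda I_n) = 0$.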

Definition: characteristic polynomial (For matrix)

The polynomial $f(t) = \det(A - tI_n)$ is called the characteristic polynomial of $A \in M_{n \times n}(F)$.

Definition: characteristic polynomial (For linear transformation)

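Let $T$ be a linear operator on an $n$-dimensional vector space $V$ with ordered basis $\beta$. The characteristic polynomial $f(t)$ of $T$ is defined to be the characteristic polynomial of $A = [T]_\beta$; that is, $f(t) = \det(A - tI_n)$.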

Example 5.1.4

To find the eigenvalues of

we compute its characteristic polynomial:

Hence, the only eigenvalues of

Example 5.1.5

Let

let

(Explanation:

)

The characteristic polynomial of

Hence

Theorem 5.3

Let $A \in M_{n \times n}(F)$.

- The characteristic polynomial of $A$ is a polynomial of degree $n$ with leading coefficient $(-1)^n$.
- $A$ has at most $n$ distinct eigenvalues.
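Both parts of the theorem can be checked on small matrices. The following is a minimal sketch, not the method used in these notes: it computes the coefficients of $f(t) = \det(A - tI_n)$ with the Faddeev-LeVerrier recurrence (a technique swapped in here for convenience) in exact rational arithmetic. The names `mat_mul` and `char_poly` and the sample matrix are assumptions chosen for illustration.

```python
from fractions import Fraction


def mat_mul(X, Y):
    """Product of two square matrices given as lists of rows."""
    n = len(X)
    return [[sum(X[i][k] * Y[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def char_poly(A):
    """Coefficients [a_0, ..., a_n] of f(t) = det(A - t I), lowest degree first.

    Uses the Faddeev-LeVerrier recurrence for det(tI - A) and then multiplies
    by (-1)^n to match the sign convention det(A - tI).
    """
    n = len(A)
    A = [[Fraction(x) for x in row] for row in A]
    M = [[Fraction(0)] * n for _ in range(n)]
    c = [Fraction(0)] * (n + 1)      # coefficients of det(tI - A)
    c[n] = Fraction(1)               # det(tI - A) is monic
    for k in range(1, n + 1):
        AM = mat_mul(A, M)
        # M_k = A * M_{k-1} + c_{n-k+1} * I
        M = [[AM[i][j] + (c[n - k + 1] if i == j else 0) for j in range(n)]
             for i in range(n)]
        AMk = mat_mul(A, M)
        # c_{n-k} = -trace(A * M_k) / k
        c[n - k] = -sum(AMk[i][i] for i in range(n)) / k
    sign = (-1) ** n
    return [sign * x for x in c]


# Illustrative matrix (an assumption, not necessarily one from the examples).
A = [[1, 1], [4, 1]]
coeffs = char_poly(A)
print(coeffs)                        # coefficients of -3 - 2t + t^2
print(coeffs[-1] == (-1) ** len(A))  # leading coefficient is (-1)^n
```

For this sample matrix the polynomial is $t^2 - 2t - 3 = (t - 3)(t + 1)$, so the eigenvalues are $3$ and $-1$, which is consistent with the bound of at most $n = 2$ distinct eigenvalues.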

Theorem 5.4

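Let $T$ be a linear operator on a vector space $V$, and let $\lambda$ be an eigenvalue of $T$. A vector $v \in V$ is an eigenvector of $T$ corresponding to $\lambda$ if and only if $v \neq 0$ and $v \in N(T - \lambda I)$.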

Example 5.1.6 [core]

To find all eigenvectors of the matrix

recall

A vector

Clearly the set of all solutions to this equation is

Now, suppose

Hence

Thus

Observe that

is a basis for

Suppose that

The diagonal entries of this matrix are the eigenvalues of

To find the eigenvectors of a linear operator

Now

An equivalent formulation of the result discussed in the preceding paragraph is that for an eigenvalue

We can choose to solve the problem using whichever path is easier.
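For the matrix route, finding the eigenvectors for a known eigenvalue $\lambda$ amounts to solving the homogeneous system $(A - \lambda I)x = 0$. The sketch below does this by row reduction in exact arithmetic. It is one possible implementation under stated assumptions: the helper names `null_space` and `eigenspace_basis` and the sample matrix are hypothetical and chosen only for illustration.

```python
from fractions import Fraction


def null_space(M):
    """Basis of {x : Mx = 0}, found by reducing M to reduced row echelon form."""
    rows = [[Fraction(x) for x in row] for row in M]
    m, n = len(rows), len(rows[0])
    pivots = []
    r = 0
    for c in range(n):
        # Find a row at or below r with a nonzero entry in column c.
        p = next((i for i in range(r, m) if rows[i][c] != 0), None)
        if p is None:
            continue
        rows[r], rows[p] = rows[p], rows[r]
        piv = rows[r][c]
        rows[r] = [x / piv for x in rows[r]]
        for i in range(m):
            if i != r and rows[i][c] != 0:
                f = rows[i][c]
                rows[i] = [a - f * b for a, b in zip(rows[i], rows[r])]
        pivots.append(c)
        r += 1
        if r == m:
            break
    free = [c for c in range(n) if c not in pivots]
    basis = []
    for fc in free:
        # Set one free variable to 1, the others to 0, and back-solve.
        v = [Fraction(0)] * n
        v[fc] = Fraction(1)
        for i, pc in enumerate(pivots):
            v[pc] = -rows[i][fc]
        basis.append(v)
    return basis


def eigenspace_basis(A, lam):
    """Basis of N(A - lam*I); empty if lam is not an eigenvalue of A."""
    n = len(A)
    shifted = [[A[i][j] - (lam if i == j else 0) for j in range(n)]
               for i in range(n)]
    return null_space(shifted)


# Illustrative matrix with eigenvalues 3 and -1 (an assumption for this sketch).
A = [[1, 1], [4, 1]]
for lam in (3, -1):
    print(lam, eigenspace_basis(A, lam))
```

Every nonzero vector in the span of the returned basis is an eigenvector for `lam`; for the sample matrix the eigenvectors for $3$ are the nonzero multiples of $(1, 2)$.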

Example 5.1.7

Let

We consider each eigenvalue separately. Let

Then

is an eigenvector corresponding to

Notice that this system has three unknowns,

for

for any

Next let

It is easily verified that

and hence the eigenvectors of

for

Since

the eigenvectors of

for

For each eigenvalue, select the corresponding eigenvector with

5.2 Diagonalizability

Theorem 5.5 and Corollary

What is still needed is a simple test to determine whether an operator or a matrix can be diagonalized, as well as a method for actually finding a basis of eigenvectors.

Theorem 5.5

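Let $T$ be a linear operator on a vector space $V$, and let $\lambda_1, \lambda_2, \ldots, \lambda_k$ be distinct eigenvalues of $T$. If $v_1, v_2, \ldots, v_k$ are eigenvectors of $T$ such that $\lambda_i$ corresponds to $v_i$ ($1 \le i \le k$), then $\{v_1, v_2, \ldots, v_k\}$ is linearly independent.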

Corollary

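Let $T$ be a linear operator on an $n$-dimensional vector space $V$. If $T$ has $n$ distinct eigenvalues, then $T$ is diagonalizable.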

Example 5.2.1

Let

The characteristic polynomial of

split

Definition: split over

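A polynomial $f(t)$ in $P(F)$ splits over $F$ if there are scalars $c, a_1, \ldots, a_n$ (not necessarily distinct) in $F$ such that $f(t) = c(t - a_1)(t - a_2) \cdots (t - a_n)$.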

For example,

If

Theorem 5.6

The characteristic polynomial of any diagonalizable linear operator splits.

From this theorem, it is clear that if

The converse of Theorem 5.6 is false; that is, the characteristic polynomial of $T$ may split even though $T$ is not diagonalizable.

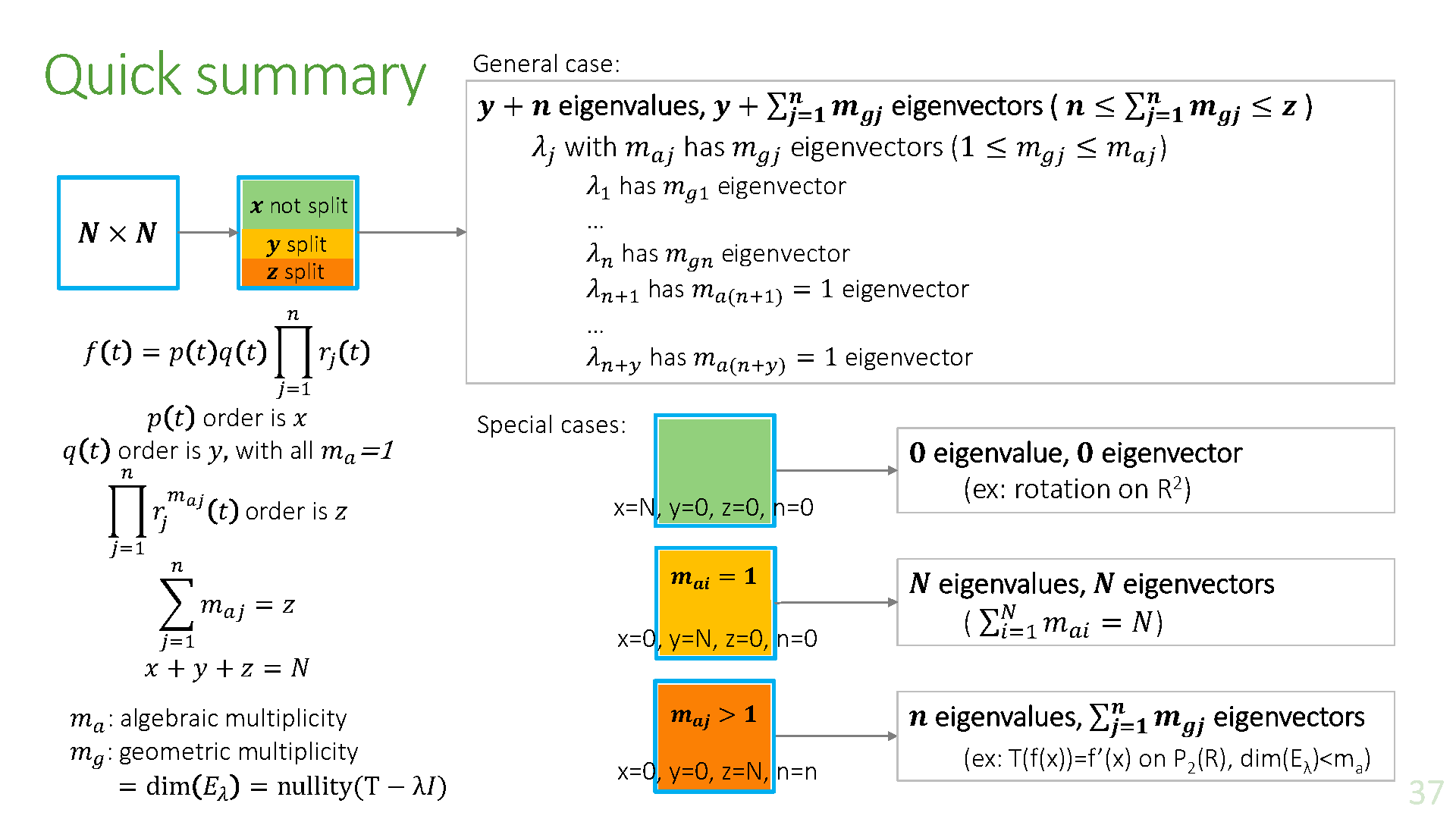

Definition: multiplicity

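Let $\lambda$ be an eigenvalue of a linear operator or matrix with characteristic polynomial $f(t)$. The (algebraic) multiplicity of $\lambda$ is the largest positive integer $k$ for which $(t - \lambda)^k$ is a factor of $f(t)$.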

Example 5.2.2

Let

which has characteristic polynomial

Remark

Let

The multiplicity of

The dimension of the eigenspace associated to eigenvalue

where

Therefore we have

The eigenvectors of

eigenspace

Definition: eigenspace

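Let $T$ be a linear operator on a vector space $V$, and let $\lambda$ be an eigenvalue of $T$. Define $E_\lambda = \{x \in V : T(x) = \lambda x\} = N(T - \lambda I_V)$. The set $E_\lambda$ is called the eigenspace of $T$ corresponding to the eigenvalue $\lambda$. Analogously, the eigenspace of a square matrix $A$ is defined to be the eigenspace of $L_A$.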

Theorem 5.7

Let

where

Example 5.2.3

Let

Consequently, the characteristic polynomial of

Thus

A matrix whose characteristic polynomial splits is diagonalizable if and only if, for every eigenvalue, the algebraic multiplicity equals the geometric multiplicity (the dimension of the corresponding eigenspace).
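The multiplicity criterion can be checked mechanically once the eigenvalues and their algebraic multiplicities are known. The following is a minimal sketch under that assumption: the geometric multiplicity is computed as $n - \operatorname{rank}(A - \lambda I)$, and the names `rank`, `geometric_multiplicity` and `is_diagonalizable`, as well as the sample matrices, are hypothetical choices for illustration.

```python
from fractions import Fraction


def rank(M):
    """Rank of M via Gaussian elimination in exact arithmetic."""
    rows = [[Fraction(x) for x in row] for row in M]
    m, n = len(rows), len(rows[0])
    r = 0
    for c in range(n):
        p = next((i for i in range(r, m) if rows[i][c] != 0), None)
        if p is None:
            continue
        rows[r], rows[p] = rows[p], rows[r]
        for i in range(r + 1, m):
            f = rows[i][c] / rows[r][c]
            rows[i] = [a - f * b for a, b in zip(rows[i], rows[r])]
        r += 1
        if r == m:
            break
    return r


def geometric_multiplicity(A, lam):
    """dim E_lam = n - rank(A - lam*I)."""
    n = len(A)
    shifted = [[A[i][j] - (lam if i == j else 0) for j in range(n)]
               for i in range(n)]
    return n - rank(shifted)


def is_diagonalizable(A, alg_mult):
    """alg_mult maps each eigenvalue to its algebraic multiplicity.

    Assumption: the caller supplies correct eigenvalues and multiplicities.
    If they do not sum to n, the characteristic polynomial does not split
    over the field in use, and the matrix is reported as not diagonalizable.
    """
    n = len(A)
    if sum(alg_mult.values()) != n:
        return False
    return all(geometric_multiplicity(A, lam) == k
               for lam, k in alg_mult.items())


# Eigenvalue 1 has algebraic multiplicity 2 but a one-dimensional eigenspace.
print(is_diagonalizable([[1, 1], [0, 1]], {1: 2}))
# Two distinct eigenvalues, each with multiplicity 1.
print(is_diagonalizable([[1, 1], [4, 1]], {3: 1, -1: 1}))
```

The first matrix shows why the splitting of the characteristic polynomial is not sufficient on its own: its characteristic polynomial $(t - 1)^2$ splits, yet the eigenspace for $1$ has dimension $1 < 2$.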

Example 5.2.4

Let

We determine the eigenspace of

and hence the characteristic polynomial of

So the eigenvalues of

Since

which means

Similarly,

Since the unknown

So a basis for

In this case, the multiplicity of each eigenvalue

is linearly independent and hence is a basis for

Lemma

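Let $T$ be a linear operator, and let $\lambda_1, \lambda_2, \ldots, \lambda_k$ be distinct eigenvalues of $T$. For each $i = 1, 2, \ldots, k$, let $v_i \in E_{\lambda_i}$. If

$$v_1 + v_2 + \cdots + v_k = 0,$$

then $v_i = 0$ for all $i$.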

Theorem 5.5 says that eigenvectors corresponding to distinct eigenvalues are linearly independent, so the only possibility is that all of the $v_i$ are zero.

Theorem 5.8

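Let $T$ be a linear operator on a vector space $V$, and let $\lambda_1, \lambda_2, \ldots, \lambda_k$ be distinct eigenvalues of $T$. For each $i = 1, 2, \ldots, k$, let $S_i$ be a finite linearly independent subset of the eigenspace $E_{\lambda_i}$. Then $S = S_1 \cup S_2 \cup \cdots \cup S_k$ is a linearly independent subset of $V$.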

Theorem 5.9

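Let $T$ be a linear operator on a finite-dimensional vector space $V$ such that the characteristic polynomial of $T$ splits. Let $\lambda_1, \lambda_2, \ldots, \lambda_k$ be the distinct eigenvalues of $T$. Then

- $T$ is diagonalizable if and only if the algebraic multiplicity of $\lambda_i$ equals $\dim(E_{\lambda_i})$ for all $i$ (i.e. algebraic multiplicity = geometric multiplicity).
- If $T$ is diagonalizable and $\beta_i$ is an ordered basis for $E_{\lambda_i}$ for each $i$, then $\beta = \beta_1 \cup \beta_2 \cup \cdots \cup \beta_k$ is an ordered basis for $V$ consisting of eigenvectors of $T$.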

Test for Diagonalizability

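Let $T$ be a linear operator on an $n$-dimensional vector space $V$. Then $T$ is diagonalizable if and only if both of the following conditions hold.

1. The characteristic polynomial of $T$ splits.
2. For each eigenvalue $\lambda$ of $T$, the multiplicity of $\lambda$ equals the corresponding geometric multiplicity: $\dim(E_\lambda) = n - \operatorname{rank}(T - \lambda I)$.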

Example 5.2.5

We test the matrix

for diagonalizability.

The characteristic polynomial of

which splits and so condition 1 of the test for diagonalization is satisfied. The eigenvalues are

Since

the matrix

Example 5.2.6 [core]

Let

With

The characteristic polynomial of

which splits. Hence, condition 1 of the test for diagonalizability is satisfied.

Also,

Condition 2 is satisfied for

Checking condition 2 for

the rank of

We now find an ordered basis

- The eigenspace corresponding to

is

which is the solution space for the system

and has

as a basis.

- The eigenspace corresponding to

is

which is the solution space for the system

and has

as a basis.

Let

Then

Finally, observe that the vector in

which is an ordered basis for

Example 5.2.7

Let

We show

First observe the characteristic polynomial of

By applying the corollary to Theorem 5.5 to the operator

are bases for the eigenspaces

is an ordered basis for

be the matrix whose columns are the vectors in

To compute

Systems of Differential Equations

Exercise 5.2.8

Consider the system of differential equations

where each

Let

and

Let

be the coefficient matrix of the given system, so that we can rewrite the system as the matrix equation

It can be verified that for

we have

or equivalently,

Define

which is a system of decoupled equations

Solutions for each

with arbitrary constants

Back-substituting,

Thus the general solution is

where

This concludes the main part of the example.
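The decoupling argument can be sketched numerically for a $2 \times 2$ system $x' = Ax$. If $A$ has eigenvalues $\lambda_1, \lambda_2$ with linearly independent eigenvectors $v_1, v_2$, the solution with $x(0) = x_0$ is $x(t) = c_1 e^{\lambda_1 t} v_1 + c_2 e^{\lambda_2 t} v_2$, where $c_1 v_1 + c_2 v_2 = x_0$. The matrix, eigenpairs and initial value below are assumptions chosen for illustration rather than the system from the exercise, and the function names are hypothetical.

```python
import math


def coefficients(v1, v2, x0):
    """Solve c1*v1 + c2*v2 = x0 by Cramer's rule (2x2 case only)."""
    det = v1[0] * v2[1] - v2[0] * v1[1]
    c1 = (x0[0] * v2[1] - v2[0] * x0[1]) / det
    c2 = (v1[0] * x0[1] - x0[0] * v1[1]) / det
    return c1, c2


def solution(lam1, v1, lam2, v2, x0):
    """Return the function t -> x(t) solving x' = Ax with x(0) = x0.

    Assumes (lam1, v1) and (lam2, v2) are eigenpairs of A with v1, v2
    linearly independent, so that A is diagonalizable.
    """
    c1, c2 = coefficients(v1, v2, x0)

    def x(t):
        e1, e2 = math.exp(lam1 * t), math.exp(lam2 * t)
        return [c1 * e1 * v1[k] + c2 * e2 * v2[k] for k in range(2)]

    return x


# Illustrative data: A = [[1, 1], [4, 1]] has eigenpairs (3, (1, 2)) and (-1, (1, -2)).
A = [[1, 1], [4, 1]]
x = solution(3, (1, 2), -1, (1, -2), (2, 0))

# Compare a central-difference derivative with A x(t) at a sample time.
t, h = 0.5, 1e-6
derivative = [(a - b) / (2 * h) for a, b in zip(x(t + h), x(t - h))]
Ax = [sum(A[i][j] * x(t)[j] for j in range(2)) for i in range(2)]
print(derivative, Ax)
```

The two printed vectors agree up to the finite-difference error, which is a quick sanity check that the eigenvector expansion satisfies the system.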

Here is a more general conclusion.

Application for ODE

Let

Suppose

Prove that a differentiable function

where

Following the steps of the previous exercise, we may pick a matrix

For the second statement, we should know first that the set

are linearly independent in the space of real functions. Since

is an