3 Elementary Matrix Operations and Systems of Linear Equations

3.1 Elementary Matrix Operations and Elementary Matrices

Elementary Matrix Operations

Definitions: Elementary Matrix Operations

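Let $A$ be an $m \times n$ matrix over a field $F$, and consider the following operations on the rows [columns] of $A$:

- (1) Interchanging any two rows [columns] of $A$;

- (2) Multiplying any row [column] of $A$ by a nonzero scalar;

- (3) Adding any scalar multiple of a row [column] of $A$ to another row [column].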

Any of these three operations is called an elementary operation. Elementary operations are of type 1, type 2, or type 3 depending on whether they are obtained by (1), (2), or (3).

Example 3.1.1

Let

Interchanging the second row of

Multiplying the second column of

Adding

Elementary Matrices

Definition: elementary matrix

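An $n \times n$ elementary matrix is a matrix obtained by performing an elementary operation on $I_n$. The elementary matrix is said to be of type 1, 2, or 3 according to whether the elementary operation performed on $I_n$ is a type 1, 2, or 3 operation, respectively.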

Theorem 3.1

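Let $A \in M_{m \times n}(F)$, and suppose that $B$ is obtained from $A$ by performing an elementary row [column] operation. Then there exists an $m \times m$ [$n \times n$] elementary matrix $E$ such that $B = EA$ [$B = AE$]. In fact, $E$ is obtained from $I_m$ [$I_n$] by performing the same elementary row [column] operation as the one performed on $A$ to obtain $B$. Conversely, if $E$ is an elementary $m \times m$ [$n \times n$] matrix, then $EA$ [$AE$] is the matrix obtained from $A$ by performing the same elementary row [column] operation as the one that produces $E$ from $I_m$ [$I_n$].

Proof skipped. The theorem is verified by checking each type of elementary row operation. The result for column operations then follows by using the matrix transpose to turn a column operation into a row operation.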
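Theorem 3.1 can be checked numerically with a minimal sketch. It applies each type of row operation to $I_3$ to obtain $E$, and then confirms that $EA$ equals the same operation applied directly to $A$. The helper names (`swap_rows`, `scale_row`, `add_multiple`) are hypothetical and introduced only here. The $3 \times 4$ sample matrix is arbitrary. Exact `Fraction` arithmetic is used so that equality checks are not affected by floating-point round-off.

```python
from fractions import Fraction

def identity(n):
    """The n x n identity matrix with exact Fraction entries."""
    return [[Fraction(int(i == j)) for j in range(n)] for i in range(n)]

def matmul(A, B):
    """Ordinary matrix product AB."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]

# The three elementary row operations; each returns a new matrix and leaves M unchanged.
def swap_rows(M, i, j):                 # type 1
    M = [row[:] for row in M]
    M[i], M[j] = M[j], M[i]
    return M

def scale_row(M, i, c):                 # type 2 (c must be nonzero)
    if c == 0:
        raise ValueError("a type 2 operation needs a nonzero scalar")
    M = [row[:] for row in M]
    M[i] = [c * x for x in M[i]]
    return M

def add_multiple(M, src, dst, c):       # type 3: row dst += c * row src
    M = [row[:] for row in M]
    M[dst] = [a + c * b for a, b in zip(M[dst], M[src])]
    return M

# An arbitrary 3 x 4 sample matrix.
A = [[Fraction(x) for x in row] for row in [[1, 2, 3, 4], [2, 1, -1, 3], [4, 0, 1, 2]]]
ops = [lambda M: swap_rows(M, 0, 1),
       lambda M: scale_row(M, 1, Fraction(3)),
       lambda M: add_multiple(M, 2, 0, Fraction(4))]

for op in ops:
    E = op(identity(3))                 # perform the operation on I_3 ...
    assert matmul(E, A) == op(A)        # ... then EA is that operation applied to A
```

The column version works the same way with $AE$ in place of $EA$.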

Example 3.1.2

Consider the matrices

Note that

In the second part of Example 3.1.1,

Observe that

Theorem 3.2: inverse of elementary matrix

Elementary matrices are invertible, and the inverse of an elementary matrix is an elementary matrix of the same type.
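The inverses in Theorem 3.2 can be written down explicitly:

- a swap undoes itself;
- scaling a row by $c$ is undone by scaling it by $1/c$;
- adding $c$ times row $j$ to row $i$ is undone by adding $-c$ times row $j$ to row $i$.

The sketch below encodes these three rules. The function names and the `kind` / `i` / `j` / `c` parameter convention are hypothetical. The assertions check $EE^{-1} = E^{-1}E = I_3$ on a few sample cases.

```python
from fractions import Fraction

def identity(n):
    return [[Fraction(int(i == j)) for j in range(n)] for i in range(n)]

def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]

def elementary(n, kind, i, j=None, c=None):
    """Hypothetical helper: the n x n elementary matrix of the given type.
    kind 1: interchange rows i and j; kind 2: multiply row i by c != 0;
    kind 3: add c times row j to row i (requires i != j)."""
    E = identity(n)
    if kind == 1:
        E[i], E[j] = E[j], E[i]
    elif kind == 2:
        E[i][i] = Fraction(c)
    else:
        E[i][j] = Fraction(c)
    return E

def elementary_inverse(n, kind, i, j=None, c=None):
    """The inverse is again an elementary matrix of the same type."""
    if kind == 1:
        return elementary(n, 1, i, j)                  # a swap undoes itself
    if kind == 2:
        return elementary(n, 2, i, c=1 / Fraction(c))  # scale back by 1/c
    return elementary(n, 3, i, j, c=-Fraction(c))      # subtract the same multiple

cases = [(1, 0, 2, None), (2, 1, None, 5), (3, 0, 2, Fraction(-7, 2))]
for kind, i, j, c in cases:
    E, Einv = elementary(3, kind, i, j, c), elementary_inverse(3, kind, i, j, c)
    assert matmul(E, Einv) == identity(3) and matmul(Einv, E) == identity(3)
```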

3.2 The Rank of a Matrix and Matrix Inverses

Definition: rank

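If $A \in M_{m \times n}(F)$, we define the rank of $A$, denoted $\operatorname{rank}(A)$, to be the rank of the linear transformation $L_A: F^n \to F^m$.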

Theorem 3.3

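Let $T: V \to W$ be a linear transformation between finite-dimensional vector spaces, and let $\beta$ and $\gamma$ be ordered bases for $V$ and $W$, respectively. Then $\operatorname{rank}(T) = \operatorname{rank}([T]_\beta^\gamma)$.

Every matrix $A$ is the matrix representation of $L_A$ with respect to the appropriate standard ordered bases. Theorem 3.3 therefore reduces the problem of finding the rank of a linear transformation to the problem of finding the rank of a matrix.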

Theorem 3.4

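Let $A$ be an $m \times n$ matrix. If $P$ and $Q$ are invertible $m \times m$ and $n \times n$ matrices, respectively, then

(a) $\operatorname{rank}(AQ) = \operatorname{rank}(A)$, (b) $\operatorname{rank}(PA) = \operatorname{rank}(A)$, and therefore, (c) $\operatorname{rank}(PAQ) = \operatorname{rank}(A)$.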

Corollary

Let

is a subspace of ; .

Corollary

Elementary row and column operations on a matrix are rank-preserving.
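Because elementary row operations preserve rank, the rank of a matrix can be computed as follows:

1. Reduce the matrix to an echelon form using only type 1 and type 3 row operations.
2. Count the pivots. The nonzero rows of an echelon form are linearly independent, so the pivot count is the rank.

The sketch below follows these steps. The function name `rank` and the sample matrices are illustrative assumptions.

```python
from fractions import Fraction

def rank(rows):
    """Rank of a matrix via elementary row operations (which preserve rank)."""
    R = [[Fraction(x) for x in row] for row in rows]
    m, n = len(R), len(R[0])
    r = 0                                    # number of pivots found so far
    for c in range(n):
        if r == m:
            break
        p = next((i for i in range(r, m) if R[i][c] != 0), None)
        if p is None:
            continue                         # no pivot in this column
        R[r], R[p] = R[p], R[r]              # type 1: interchange rows
        for i in range(r + 1, m):            # type 3: clear the entries below the pivot
            f = R[i][c] / R[r][c]
            R[i] = [a - f * b for a, b in zip(R[i], R[r])]
        r += 1
    return r                                 # pivots = nonzero rows of the echelon form

print(rank([[1, 2, 3], [2, 4, 6], [1, 0, 1]]))  # the 2nd row is twice the 1st
```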

Theorem 3.5

The rank of any matrix equals the maximum number of its linearly independent columns; that is, the rank of a matrix is the dimension of the subspace generated by its columns.

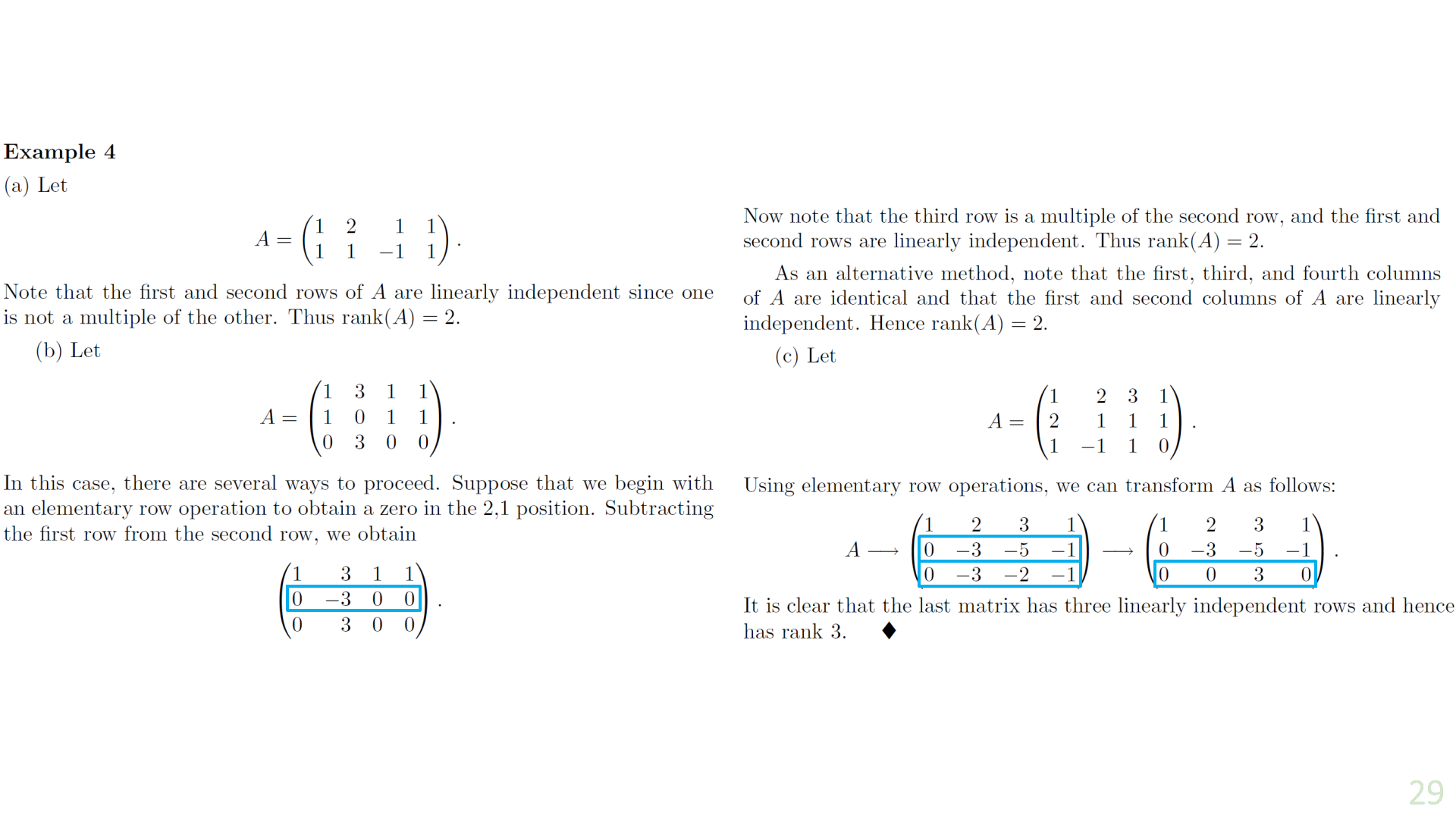

Example 3.2.1 (Rank Determination)

Let

Observe that the first and second columns of

Example 3.2.2

Let

If we subtract the 1st row of

If we now subtract twice the 1st column from the 2nd and subtract the 1st column from the 3rd (type 3 elementary column operations), we obtain

It is now obvious that the maximum number of linearly independent columns of this matrix is

Theorem 3.6

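Let $A$ be an $m \times n$ matrix of rank $r$. Then $r \le m$, $r \le n$, and, by means of a finite number of elementary row and column operations, $A$ can be transformed into the matrix

$$D = \begin{pmatrix} I_r & O_1 \\ O_2 & O_3 \end{pmatrix},$$

where $O_1$, $O_2$, and $O_3$ are zero matrices. Thus $D_{ii} = 1$ for $i \le r$ and $D_{ij} = 0$ otherwise.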
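Here is a small worked instance of Theorem 3.6. The $2 \times 2$ matrix is chosen only for illustration and is not taken from these notes' examples. One type 3 row operation followed by one type 3 column operation brings this rank-1 matrix to the form $D$ with $r = 1$:

```latex
\begin{pmatrix} 1 & 2 \\ 2 & 4 \end{pmatrix}
\xrightarrow{\;R_2 \to R_2 - 2R_1\;}
\begin{pmatrix} 1 & 2 \\ 0 & 0 \end{pmatrix}
\xrightarrow{\;C_2 \to C_2 - 2C_1\;}
\begin{pmatrix} 1 & 0 \\ 0 & 0 \end{pmatrix}
= \begin{pmatrix} I_1 & O_1 \\ O_2 & O_3 \end{pmatrix}.
```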

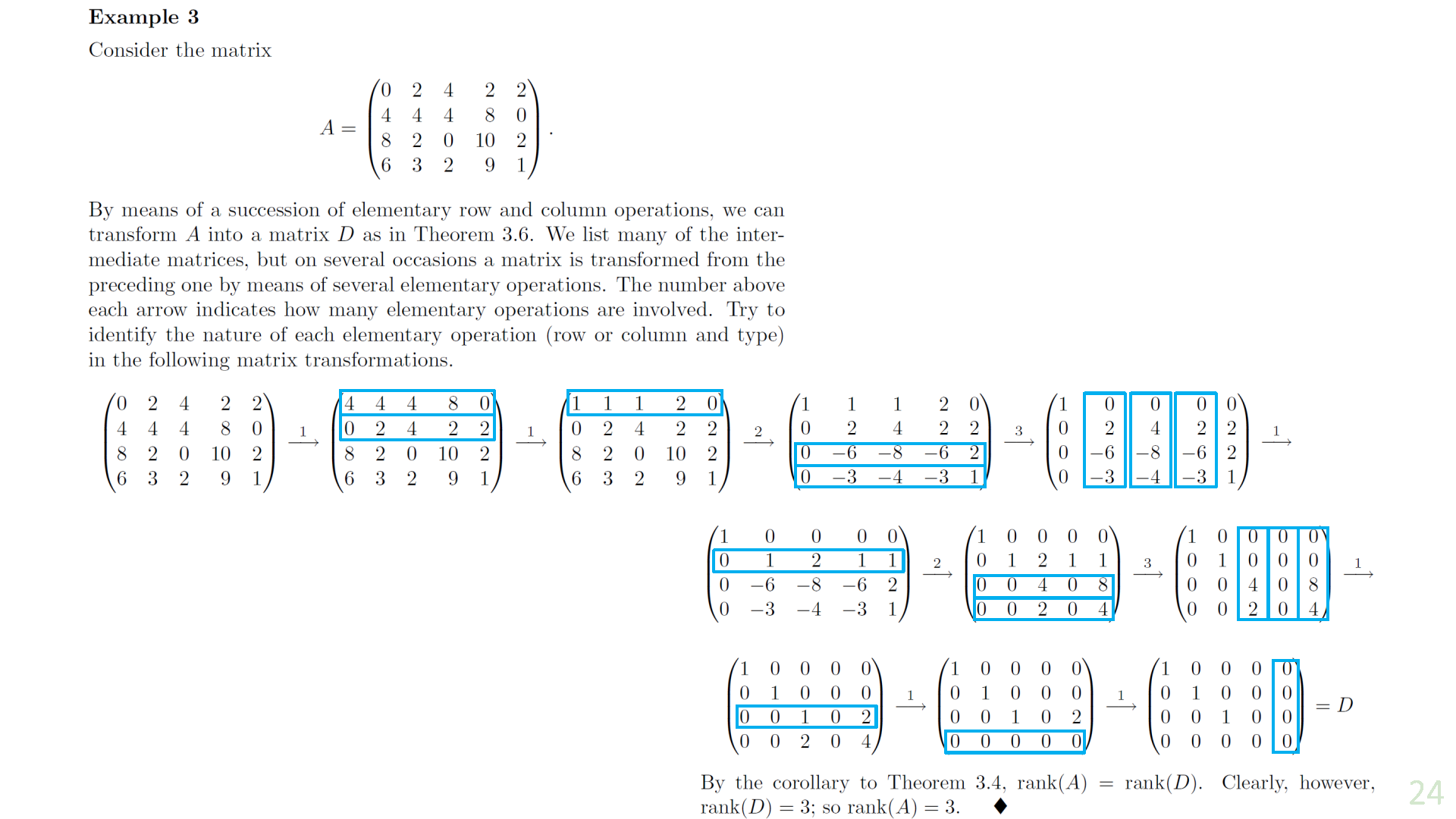

Example 3.2.3

Conclusion about rank

Corollary 1

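Let $A$ be an $m \times n$ matrix of rank $r$. Then there exist invertible matrices $B$ and $C$ of sizes $m \times m$ and $n \times n$, respectively, such that $D = BAC$, where

$$D = \begin{pmatrix} I_r & O_1 \\ O_2 & O_3 \end{pmatrix}$$

is the $m \times n$ matrix in which $O_1$, $O_2$, and $O_3$ are zero matrices.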

Corollary 2

Let $A$ be an $m \times n$ matrix. Then:

- $\operatorname{rank}(A^t) = \operatorname{rank}(A)$.

- The rank of any matrix equals the maximum number of its linearly independent rows; that is, the rank of a matrix is the dimension of the subspace generated by its rows.

- The rows and columns of any matrix generate subspaces of the same dimension, numerically equal to the rank of the matrix.

Corollary 3

Every invertible matrix is a product of elementary matrices.

Theorem 3.7

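Let $T: V \to W$ and $U: W \to Z$ be linear transformations on finite-dimensional vector spaces $V$, $W$, and $Z$, and let $A$ and $B$ be matrices such that the product $AB$ is defined. Then

(a) $\operatorname{rank}(UT) \le \operatorname{rank}(U)$.

(b) $\operatorname{rank}(UT) \le \operatorname{rank}(T)$.

(c) $\operatorname{rank}(AB) \le \operatorname{rank}(A)$.

(d) $\operatorname{rank}(AB) \le \operatorname{rank}(B)$.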

Example 3.2.4

The Inverse of a Matrix

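Definition: augmented matrix

Let $A$ and $B$ be $m \times n$ and $m \times p$ matrices, respectively. By the augmented matrix $(A|B)$, we mean the $m \times (n + p)$ matrix $(A\ B)$, that is, the matrix whose first $n$ columns are the columns of $A$ and whose last $p$ columns are the columns of $B$.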

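Let $A$ be an invertible $n \times n$ matrix, and consider the $n \times 2n$ augmented matrix $C = (A|I_n)$. Then

$$A^{-1}C = (A^{-1}A\,|\,A^{-1}I_n) = (I_n|A^{-1}).$$

By Corollary 3 to Theorem 3.6, $A^{-1}$ is a product of elementary matrices, say $A^{-1} = E_pE_{p-1}\cdots E_1$, so that

$$E_pE_{p-1}\cdots E_1(A|I_n) = (I_n|A^{-1}).$$

Multiplying a matrix on the left by an elementary matrix transforms the matrix by an elementary row operation (Theorem 3.1 p. 149). This gives the following result: if $A$ is an invertible $n \times n$ matrix, then the matrix $(A|I_n)$ can be transformed into the matrix $(I_n|A^{-1})$ by a finite number of elementary row operations.

Conversely, suppose that $A$ is invertible and that, for some $n \times n$ matrix $B$, the matrix $(A|I_n)$ can be transformed into the matrix $(I_n|B)$ by a finite number of elementary row operations.

Let $E_1, E_2, \ldots, E_p$ be the elementary matrices associated with these operations, and let $M = E_p\cdots E_1$. Then $(MA|M) = M(A|I_n) = (I_n|B)$. Hence $MA = I_n$ and $M = B$, so $B = A^{-1}$.

If, on the other hand, $A$ is an $n \times n$ matrix that is not invertible, then $\operatorname{rank}(A) < n$. Elementary row operations preserve rank, so they cannot transform $A$ into $I_n$. Any attempt to transform $(A|I_n)$ into a matrix of the form $(I_n|B)$ must therefore fail. In fact, row reduction produces a row whose first $n$ entries are zeros.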
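The procedure just described can be sketched as follows:

1. Build $(A|I_n)$.
2. Row reduce it, clearing each pivot column above and below the pivot.
3. Either read $A^{-1}$ from the right half, or stop as soon as a column has no available pivot. In the second case a row whose first $n$ entries are zero will appear, so $A$ is not invertible.

The function name `inverse_via_augmented` is hypothetical, and `Fraction` is used to keep the arithmetic exact.

```python
from fractions import Fraction

def inverse_via_augmented(A):
    """Row reduce (A | I_n); return A^{-1} if (I_n | B) is reached, else None."""
    n = len(A)
    # Build the n x 2n augmented matrix (A | I_n).
    M = [[Fraction(x) for x in row] + [Fraction(int(i == j)) for j in range(n)]
         for i, row in enumerate(A)]
    for c in range(n):
        p = next((i for i in range(c, n) if M[i][c] != 0), None)
        if p is None:
            # Column c is a combination of earlier columns, so rank(A) < n.
            return None
        M[c], M[p] = M[p], M[c]                    # type 1: bring the pivot up
        piv = M[c][c]
        M[c] = [x / piv for x in M[c]]             # type 2: make the pivot 1
        for i in range(n):                         # type 3: zeros above and below
            if i != c and M[i][c] != 0:
                f = M[i][c]
                M[i] = [a - f * b for a, b in zip(M[i], M[c])]
    return [row[n:] for row in M]                  # right half is A^{-1}
```

For example, `inverse_via_augmented([[2, 1], [1, 1]])` returns the entries of $\begin{pmatrix} 1 & -1 \\ -1 & 2 \end{pmatrix}$. `inverse_via_augmented([[1, 2], [2, 4]])` returns `None`.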

Example 3.2.5

Example 3.2.6

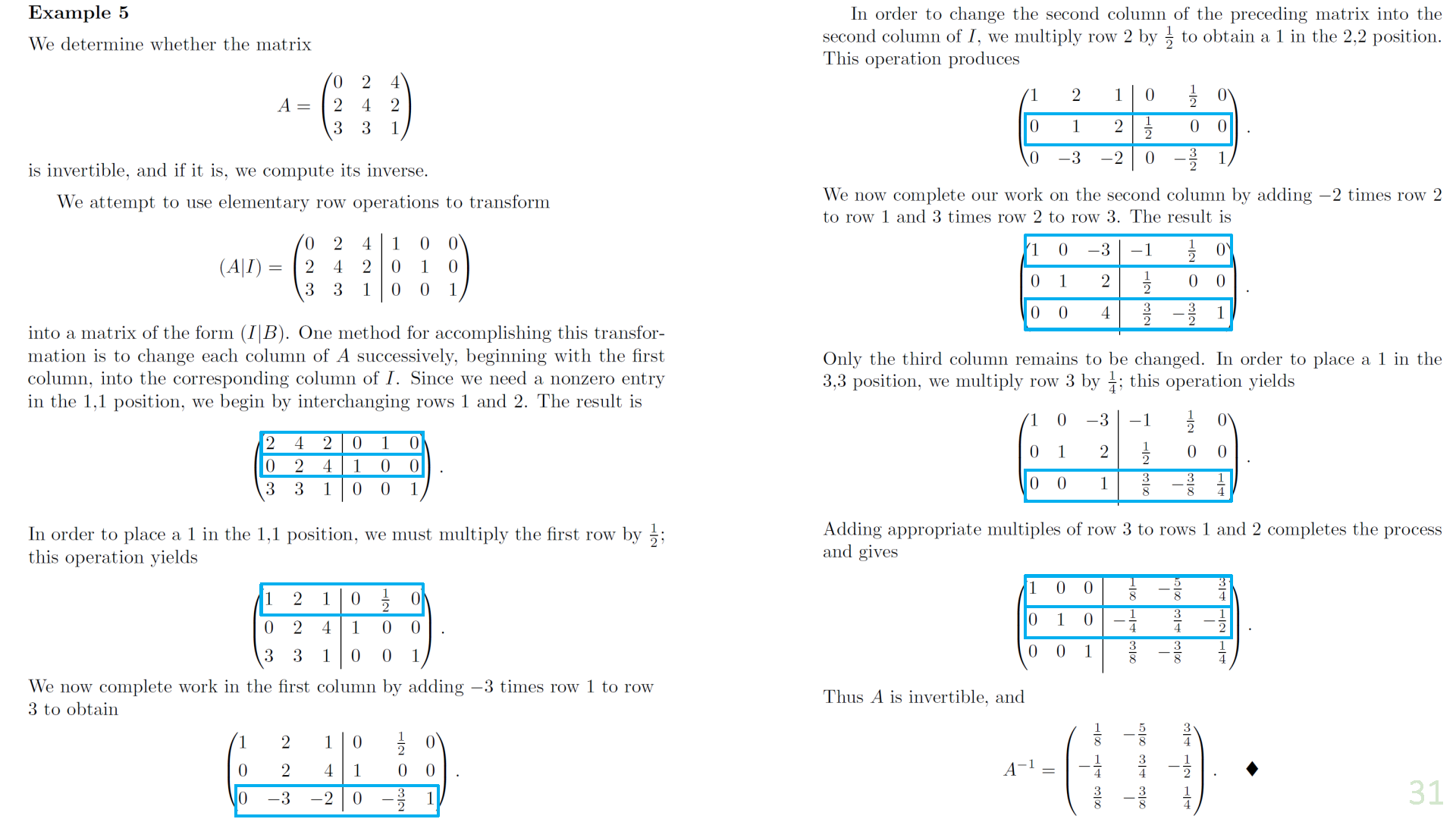

We determine whether the matrix $A$ is invertible, and if it is, we compute its inverse. Using a strategy similar to the one used in Example 3.2.5, we attempt to use elementary row operations to transform

$$(A|I_3)$$

into a matrix of the form

$$(I_3|B).$$

The reduction instead produces a matrix with a row whose first 3 entries are zeros. Therefore $A$ is not invertible.

Example 3.2.7 [core]

Let

where

Using the method of Examples 3.2.5 and 3.2.6,

Thus

Therefore

3.3 Systems of Linear Equations - Theoretical Aspects

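The system of equations

$$(S)\qquad \begin{aligned} a_{11}x_1 + a_{12}x_2 + \cdots + a_{1n}x_n &= b_1 \\ a_{21}x_1 + a_{22}x_2 + \cdots + a_{2n}x_n &= b_2 \\ &\ \,\vdots \\ a_{m1}x_1 + a_{m2}x_2 + \cdots + a_{mn}x_n &= b_m, \end{aligned}$$

where $a_{ij}$ and $b_i$ ($1 \le i \le m$, $1 \le j \le n$) are scalars in a field $F$ and $x_1, x_2, \ldots, x_n$ are $n$ variables taking values in $F$, is called a system of $m$ linear equations in $n$ unknowns over the field $F$.

The $m \times n$ matrix

$$A = \begin{pmatrix} a_{11} & a_{12} & \cdots & a_{1n} \\ \vdots & \vdots & & \vdots \\ a_{m1} & a_{m2} & \cdots & a_{mn} \end{pmatrix}$$

is called the coefficient matrix of the system (S).

We can write the system as a single matrix equation

$$Ax = b,$$

where $x = (x_1, x_2, \ldots, x_n)^t$ and $b = (b_1, b_2, \ldots, b_m)^t$.

To exploit the results that we have developed, we often consider a system of linear equations as a single matrix equation.

A solution to the system (S) is an $n$-tuple

$$s = (s_1, s_2, \ldots, s_n)^t \in F^n$$

such that $As = b$. The set of all solutions is called the solution set of the system. The system is called consistent if its solution set is nonempty and inconsistent otherwise.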

Example 3.3.1

(a) Consider the system

The solution is unique:

In matrix form:

(b) Consider the system

which has infinitely many solutions, such as:

(c) Consider

that is

It is evident that this system has no solutions. Thus a system of linear equations can have one, many, or no solutions.

Homogeneous Systems

Definitions: homogeneous

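A system $Ax = b$ of $m$ linear equations in $n$ unknowns is said to be homogeneous if $b = 0$. Otherwise the system is said to be nonhomogeneous.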

Theorem 3.8

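Let $Ax = 0$ be a homogeneous system of $m$ linear equations in $n$ unknowns over a field $F$, and let $K$ denote the set of all solutions to $Ax = 0$. Then $K = N(L_A)$. Hence $K$ is a subspace of $F^n$ of dimension $n - \operatorname{rank}(L_A) = n - \operatorname{rank}(A)$.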

Corollary

If $m < n$, the system $Ax = 0$ has a nonzero solution.
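Theorem 3.8 can be made concrete with a short sketch:

1. Compute the reduced row echelon form.
2. For each free (non-pivot) variable, set that variable to 1 and the other free variables to 0, then solve each row for its pivot variable.

This yields $n - \operatorname{rank}(A)$ independent solutions. The `rref` helper is a compact Gauss-Jordan variant that clears each pivot column above and below in a single pass. The function names and the sample systems are assumptions made for illustration.

```python
from fractions import Fraction

def rref(rows):
    """Gauss-Jordan reduction: returns (reduced row echelon form, pivot columns)."""
    R = [[Fraction(x) for x in row] for row in rows]
    m, n = len(R), len(R[0])
    pivots, r = [], 0
    for c in range(n):
        if r == m:
            break
        p = next((i for i in range(r, m) if R[i][c] != 0), None)
        if p is None:
            continue
        R[r], R[p] = R[p], R[r]                      # type 1
        lead = R[r][c]
        R[r] = [x / lead for x in R[r]]              # type 2: leading 1
        for i in range(m):                           # type 3: clear the column
            if i != r and R[i][c] != 0:
                f = R[i][c]
                R[i] = [a - f * b for a, b in zip(R[i], R[r])]
        pivots.append(c)
        r += 1
    return R, pivots

def null_space_basis(A):
    """Basis of K = N(L_A), the solution set of Ax = 0; it has n - rank(A) vectors."""
    R, pivots = rref(A)
    n = len(A[0])
    basis = []
    for f in (j for j in range(n) if j not in pivots):   # free variables
        v = [Fraction(0)] * n
        v[f] = Fraction(1)                   # this free variable = 1, the others = 0
        for row, p in enumerate(pivots):
            v[p] = -R[row][f]                # solve this row for its pivot variable
        basis.append(v)
    return basis
```

With $m = 2 < n = 3$, `null_space_basis([[1, 2, 1], [1, -1, -1]])` returns one vector, proportional to $(1, -2, 3)$. This is consistent with the corollary.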

Example 3.3.2 [core]

(a) Consider the system

Let

be the coefficient matrix of this system. It is clear that

is a solution to the given system, it is a basis for

where

(b) Consider the system

are linearly independent vectors in

Theorem 3.9

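Let $K$ be the solution set of a system of linear equations $Ax = b$, and let $K_H$ be the solution set of the corresponding homogeneous system $Ax = 0$. Then for any solution $s$ to $Ax = b$,

$$K = \{s\} + K_H = \{s + k : k \in K_H\}.$$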

Example 3.3.3

(a) Consider the system

The corresponding homogeneous system is the system in Example 3.3.2(a). It is easily verified that

is a solution to the preceding nonhomogeneous system. So the solution set of the system is

by Theorem 3.9.

(b) Consider the system

The corresponding homogeneous system is the system in Example 3.3.2(b). Since

is a solution to the given system, the solution set

Theorem 3.10

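Let $Ax = b$ be a system of $n$ linear equations in $n$ unknowns. If $A$ is invertible, then the system has exactly one solution, namely, $A^{-1}b$.

Conversely, if the system has exactly one solution, then $A$ is invertible.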
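For the $2 \times 2$ case, Theorem 3.10 can be illustrated with the explicit inverse

$$\begin{pmatrix} a & b \\ c & d \end{pmatrix}^{-1} = \frac{1}{ad - bc}\begin{pmatrix} d & -b \\ -c & a \end{pmatrix} \qquad (ad - bc \ne 0).$$

A minimal sketch follows. The function name `solve_2x2` is hypothetical. The sketch covers only $2 \times 2$ systems; larger systems would use row reduction instead.

```python
from fractions import Fraction

def solve_2x2(A, b):
    """Unique solution x = A^{-1} b of a 2 x 2 system, or None if A is singular."""
    (a11, a12), (a21, a22) = A
    det = Fraction(a11) * a22 - Fraction(a12) * a21
    if det == 0:
        # A is not invertible, so by the converse in Theorem 3.10 the system
        # does not have exactly one solution (it has none or infinitely many).
        return None
    # Explicit inverse of a 2 x 2 matrix: (1/det) [[a22, -a12], [-a21, a11]].
    inv = [[a22 / det, -a12 / det], [-a21 / det, a11 / det]]
    return [inv[0][0] * b[0] + inv[0][1] * b[1],
            inv[1][0] * b[0] + inv[1][1] * b[1]]
```

For instance, the system $x_1 + x_2 = 3$, $x_1 - x_2 = 1$ gives `solve_2x2([[1, 1], [1, -1]], [3, 1])`, which returns $x = (2, 1)$.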

Example 3.3.4

Consider the system:

Using the inverse matrix of the coefficient matrix, the unique solution is

Theorem 3.11

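Let $Ax = b$ be a system of linear equations. Then the system is consistent if and only if $\operatorname{rank}(A) = \operatorname{rank}(A|b)$.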
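Theorem 3.11 translates directly into a consistency test: compute the rank of $A$ and of $(A|b)$, and compare the two. In the sketch below, `rank` uses forward elimination and counts pivots. The names `rank` and `is_consistent` are hypothetical, and the sample systems are illustrative.

```python
from fractions import Fraction

def rank(rows):
    """Number of pivots after forward elimination (row operations preserve rank)."""
    R = [[Fraction(x) for x in row] for row in rows]
    m, n = len(R), len(R[0])
    r = 0
    for c in range(n):
        if r == m:
            break
        p = next((i for i in range(r, m) if R[i][c] != 0), None)
        if p is None:
            continue
        R[r], R[p] = R[p], R[r]
        for i in range(r + 1, m):
            f = R[i][c] / R[r][c]
            R[i] = [a - f * b for a, b in zip(R[i], R[r])]
        r += 1
    return r

def is_consistent(A, b):
    """Ax = b has a solution iff rank(A) == rank(A|b)."""
    augmented = [list(row) + [bi] for row, bi in zip(A, b)]
    return rank(A) == rank(augmented)
```

For $x_1 + x_2 = 0$, $x_1 + x_2 = 1$, the call `is_consistent([[1, 1], [1, 1]], [0, 1])` is `False`, because the ranks are 1 and 2.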

Example 3.3.5

Recall the system of equations

in Example 3.3.1(c). Since

Because the two ranks are unequal, the system has no solutions.

Example 3.3.6

We can use Theorem 3.11 to determine whether

We check if

Since the ranks of the coefficient matrix and the augmented matrix of this system are 2 and 3, respectively, this system has no solutions. Hence

3.4 Systems of Linear Equations - Computational Aspects

Definition: equivalent

Two systems of linear equations are called equivalent if they have the same solution set.

Theorem 3.13

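Let $Ax = b$ be a system of $m$ linear equations in $n$ unknowns, and let $C$ be an invertible $m \times m$ matrix. Then the system $(CA)x = Cb$ is equivalent to $Ax = b$.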

Corollary

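If $(A'|b')$ is obtained from $(A|b)$ by a finite number of elementary row operations, then the system $A'x = b'$ is equivalent to the original system $Ax = b$.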

Gaussian Elimination Method Outline:

- Form the augmented matrix $(A|b)$.

- Use elementary row operations to transform $(A|b)$ into reduced row echelon form.

- Solve the system from the reduced form using back substitution.

We now describe a method for solving any system of linear equations.

Consider the system:

First, form the augmented matrix:

Then perform elementary row operations to transform it into an upper triangular matrix in reduced row echelon form, using the following steps:

- In the leftmost nonzero column, create a 1 in the first row (by interchanging rows and/or multiplying a row by a nonzero scalar);

- By means of type 3 row operations, use the first row to obtain zeros in the remaining positions of the leftmost nonzero column;

- Create a 1 in the next row in the leftmost possible column, without using previous rows;

- Use type 3 elementary row operations to obtain zeros below the 1 created in the preceding step;

- Repeat the previous two steps on each succeeding row until no nonzero rows remain;

- Work upward, beginning with the last nonzero row, and add multiples of each row to the rows above to create zeros above its leading 1. Repeat the process for each preceding row until it is performed with the 2nd row, at which point the reduction is complete.
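The six steps above can be sketched as two passes over the matrix:

- **Forward pass.** Create a leading 1 in each row by interchanging and scaling rows, then clear the entries below it with type 3 operations.
- **Backward pass.** Starting from the last nonzero row and moving up, clear the entries above each leading 1.

This is a minimal illustration, not a numerically robust solver. It uses exact `Fraction` arithmetic and no pivoting strategy, and the name `gaussian_elimination` is hypothetical.

```python
from fractions import Fraction

def gaussian_elimination(M):
    """Reduce M to reduced row echelon form with a forward pass, then a backward pass."""
    R = [[Fraction(x) for x in row] for row in M]
    m, n = len(R), len(R[0])
    pivots = []                                     # (row, column) of each leading 1
    r = 0
    # Forward pass: leading 1s with zeros below them.
    for c in range(n):
        if r == m:
            break
        p = next((i for i in range(r, m) if R[i][c] != 0), None)
        if p is None:
            continue                                # nothing to pivot on in this column
        R[r], R[p] = R[p], R[r]                     # type 1: interchange rows
        lead = R[r][c]
        R[r] = [x / lead for x in R[r]]             # type 2: create a 1
        for i in range(r + 1, m):                   # type 3: zeros below the 1
            f = R[i][c]
            R[i] = [a - f * b for a, b in zip(R[i], R[r])]
        pivots.append((r, c))
        r += 1
    # Backward pass: from the last nonzero row upward, clear above each leading 1.
    for row, c in reversed(pivots):
        for i in range(row):
            f = R[i][c]
            R[i] = [a - f * b for a, b in zip(R[i], R[row])]
    return R
```

Applied to an augmented matrix $(A|b)$, for example `gaussian_elimination([[1, 2, 3], [4, 5, 6]])`, the call returns the entries of $\begin{pmatrix} 1 & 0 & -1 \\ 0 & 1 & 2 \end{pmatrix}$. From this, $x_1 = -1$ and $x_2 = 2$ can be read off.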

Definition: Reduced row echelon form

A matrix is said to be in reduced row echelon form if it satisfies the following three conditions:

(a) Any row containing a nonzero entry precedes any row in which all the entries are zero (if any).

(b) The first nonzero entry in each row is the only nonzero entry in its column.

(c) The first nonzero entry in each row is 1, and it occurs in a column to the right of the first nonzero entry in the preceding row.

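The method described above is known as Gaussian elimination. It consists of a forward elimination pass that creates leading 1s with zeros below them, followed by a backward pass (back substitution) that creates zeros above them.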

Theorem 3.14

Gaussian elimination transforms any matrix into its reduced row echelon form.

Theorem 3.15

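Let $Ax = b$ be a system of $r$ nonzero equations in $n$ unknowns. Suppose that $\operatorname{rank}(A) = \operatorname{rank}(A|b)$ and that $(A|b)$ is in reduced row echelon form. Then

- $\operatorname{rank}(A) = r$.

- If the general solution obtained by the procedure above is of the form

$$s = s_0 + t_1u_1 + t_2u_2 + \cdots + t_{n-r}u_{n-r},$$

then $\{u_1, u_2, \ldots, u_{n-r}\}$ is a basis for the solution set of the corresponding homogeneous system, and $s_0$ is a solution to the original system.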
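The sketch below reads the general solution of Theorem 3.15 off an augmented matrix $(A|b)$ that is already in reduced row echelon form and consistent:

- **Particular solution $s_0$.** Set every free variable to 0 and take each pivot variable from the last column.
- **Homogeneous vectors $u_i$.** For each free variable, set it to 1 and the other free variables to 0, then take each pivot variable as the negated entry in that free variable's column.

The name `general_solution` and the sample matrix are assumptions for illustration. The function does not check its preconditions.

```python
from fractions import Fraction

def general_solution(Ab):
    """For (A|b) already in reduced row echelon form with rank(A) = rank(A|b),
    return (s0, [u_1, ..., u_{n-r}]) so that every solution is
    s0 + t_1 u_1 + ... + t_{n-r} u_{n-r}."""
    n = len(Ab[0]) - 1                               # number of unknowns
    rows = [[Fraction(x) for x in row] for row in Ab if any(x != 0 for x in row)]
    # Leading 1 of each nonzero row; consistency guarantees it lies in the A part.
    pivots = [next(j for j in range(n) if row[j] != 0) for row in rows]
    free = [j for j in range(n) if j not in pivots]
    s0 = [Fraction(0)] * n
    for row, p in zip(rows, pivots):
        s0[p] = row[n]                               # all free variables set to 0
    us = []
    for f in free:
        u = [Fraction(0)] * n
        u[f] = Fraction(1)                           # direction for the parameter of x_f
        for row, p in zip(rows, pivots):
            u[p] = -row[f]
        us.append(u)
    return s0, us
```

For the reduced system with rows $(1, 0, 2, 0 \mid 1)$, $(0, 1, -1, 0 \mid 3)$, $(0, 0, 0, 1 \mid 4)$, this gives $s_0 = (1, 3, 0, 4)$ and $u_1 = (-2, 1, 1, 0)$. Here $n - r = 4 - 3 = 1$.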

Theorem 3.16

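Let $A$ be an $m \times n$ matrix of rank $r$, where $r > 0$, and let $B$ be the reduced row echelon form of $A$. Then:

(a) The number of nonzero rows in $B$ is $r$.

(b) For each $i = 1, 2, \ldots, r$, there is a column $b_{j_i}$ of $B$ such that $b_{j_i} = e_i$.

(c) The columns of $A$ numbered $j_1, j_2, \ldots, j_r$ are linearly independent.

(d) For each $k = 1, 2, \ldots, n$, if column $k$ of $B$ is $d_1e_1 + d_2e_2 + \cdots + d_re_r$, then column $k$ of $A$ is $d_1a_{j_1} + d_2a_{j_2} + \cdots + d_ra_{j_r}$.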
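Parts (c) and (d) of Theorem 3.16 can be checked on a sample matrix with a short sketch:

- `column_space_basis` keeps the columns of $A$ at the pivot positions of its reduced row echelon form.
- `column_from_pivots` rebuilds column $k$ of $A$ from those pivot columns, using the entries of column $k$ of the reduced form as coefficients.

The `rref` helper is a one-pass Gauss-Jordan variant. All function names and the sample matrix are hypothetical.

```python
from fractions import Fraction

def rref(rows):
    """Gauss-Jordan reduction: returns (reduced row echelon form, pivot columns)."""
    R = [[Fraction(x) for x in row] for row in rows]
    m, n = len(R), len(R[0])
    pivots, r = [], 0
    for c in range(n):
        if r == m:
            break
        p = next((i for i in range(r, m) if R[i][c] != 0), None)
        if p is None:
            continue
        R[r], R[p] = R[p], R[r]
        lead = R[r][c]
        R[r] = [x / lead for x in R[r]]
        for i in range(m):
            if i != r and R[i][c] != 0:
                f = R[i][c]
                R[i] = [a - f * b for a, b in zip(R[i], R[r])]
        pivots.append(c)
        r += 1
    return R, pivots

def column_space_basis(A):
    """(c): the columns of A at the pivot positions j_1, ..., j_r are independent."""
    _, pivots = rref(A)
    return pivots, [[row[j] for row in A] for j in pivots]

def column_from_pivots(A, k):
    """(d): if column k of the RREF is d_1 e_1 + ... + d_r e_r, then
    column k of A is d_1 a_{j_1} + ... + d_r a_{j_r}."""
    R, pivots = rref(A)
    d = [R[i][k] for i in range(len(pivots))]
    return [sum(di * A[row][j] for di, j in zip(d, pivots)) for row in range(len(A))]
```

For $A$ with rows $(1, 2, 1, 0)$, $(2, 4, 3, 1)$, $(1, 2, 2, 1)$, the pivot columns are the 1st and 3rd. Every other column of $A$ is recovered from these two by `column_from_pivots`.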

Corollary

The reduced row echelon form of a matrix is unique.

Example 3.4.2

Let

The reduced row echelon form of

Since

Let the columns of

Example 3.4.3

The set

generates a subspace

consisting of the images of the polynomials in

is a basis for the subspace of

is a basis for the subspace

Example 3.4.4 [mid-term]

Let

It is easily verified that

is a linearly independent subset of

To extend

and assign parametric values to

Hence

is a basis for

The matrix whose columns consist of the vectors in

and its reduced row echelon form is

Thus

is a basis for
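The last step of Example 3.4.4 selects a basis from the columns of a matrix by row reduction, in the manner of Theorem 3.16. The column-selection step can be sketched as follows, assuming the columns are listed as the vectors of the independent set $S$ first and then a generating set of the space:

- Form the matrix whose columns are the vectors of $S$ followed by the generating set.
- Row reduce it and keep the columns that are pivot columns.

Since $S$ is linearly independent, no vector in $S$ is a combination of earlier columns, so all of $S$ survives. The names `extend_to_basis` and `generators`, and the sample vectors, are hypothetical.

```python
from fractions import Fraction

def rref(rows):
    """Gauss-Jordan reduction: returns (reduced row echelon form, pivot columns)."""
    R = [[Fraction(x) for x in row] for row in rows]
    m, n = len(R), len(R[0])
    pivots, r = [], 0
    for c in range(n):
        if r == m:
            break
        p = next((i for i in range(r, m) if R[i][c] != 0), None)
        if p is None:
            continue
        R[r], R[p] = R[p], R[r]
        lead = R[r][c]
        R[r] = [x / lead for x in R[r]]
        for i in range(m):
            if i != r and R[i][c] != 0:
                f = R[i][c]
                R[i] = [a - f * b for a, b in zip(R[i], R[r])]
        pivots.append(c)
        r += 1
    return R, pivots

def extend_to_basis(S, generators):
    """Extend the independent list S to a basis of span(S together with generators)
    by keeping the pivot columns of [S | generators]."""
    vectors = [list(v) for v in S] + [list(g) for g in generators]
    A = [[v[i] for v in vectors] for i in range(len(vectors[0]))]  # vectors as columns
    _, pivots = rref(A)
    return [vectors[j] for j in pivots]
```

For example, extending $S = \{(1, 1, 0)\}$ with the standard basis of $\mathbb{R}^3$ as generators keeps $(1, 1, 0)$ and adds $(1, 0, 0)$ and $(0, 0, 1)$.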